PyAudio - 前几个录音块为零

我在尝试同步播放设备或从设备(在本例中为我的笔记本电脑扬声器和麦克风)录制音频时遇到了一些问题。

问题

我尝试使用Python模块来实现这个:“sounddevice”和“pyaudio”;但这两种实现都有一个奇怪的问题,即录制的音频的前几帧始终为零。还有其他人遇到过此类问题吗?这个问题似乎与所使用的块大小无关(即,其样本数量始终为零)。

我能做些什么来防止这种情况发生吗?

代码

import queue

import matplotlib.pyplot as plt

import numpy as np

import pyaudio

import soundfile as sf

FRAME_SIZE = 512

excitation, fs = sf.read("excitation.wav", dtype=np.float32)

# Instantiate PyAudio

p = pyaudio.PyAudio()

q = queue.Queue()

output_idx = 0

mic_buffer = np.zeros((excitation.shape[0] + FRAME_SIZE

- (excitation.shape[0] % FRAME_SIZE), 1))

def rec_play_callback(in_data, framelength, time_info, status):

global output_idx

# print status of playback in case of event

if status:

print(f"status: {status}")

chunksize = min(excitation.shape[0] - output_idx, framelength)

# write data to output buffer

out_data = excitation[output_idx:output_idx + chunksize]

# write input data to input buffer

inputsamples = np.frombuffer(in_data, dtype=np.float32)

if not np.sum(inputsamples):

print("Empty frame detected")

# send input data to buffer for main thread

q.put(inputsamples)

if chunksize < framelength:

out_data[chunksize:] = 0

return (out_data.tobytes(), pyaudio.paComplete)

output_idx += chunksize

return (out_data.tobytes(), pyaudio.paContinue)

# Define playback and record stream

stream = p.open(rate=fs,

channels=1,

frames_per_buffer=FRAME_SIZE,

format=pyaudio.paFloat32,

input=True,

output=True,

input_device_index=1, # Macbook Pro microphone

output_device_index=2, # Macbook Pro speakers

stream_callback=rec_play_callback)

stream.start_stream()

input_idx = 0

while stream.is_active():

data = q.get(timeout=1)

mic_buffer[input_idx:input_idx + FRAME_SIZE, 0] = data

input_idx += FRAME_SIZE

stream.stop_stream()

stream.close()

p.terminate()

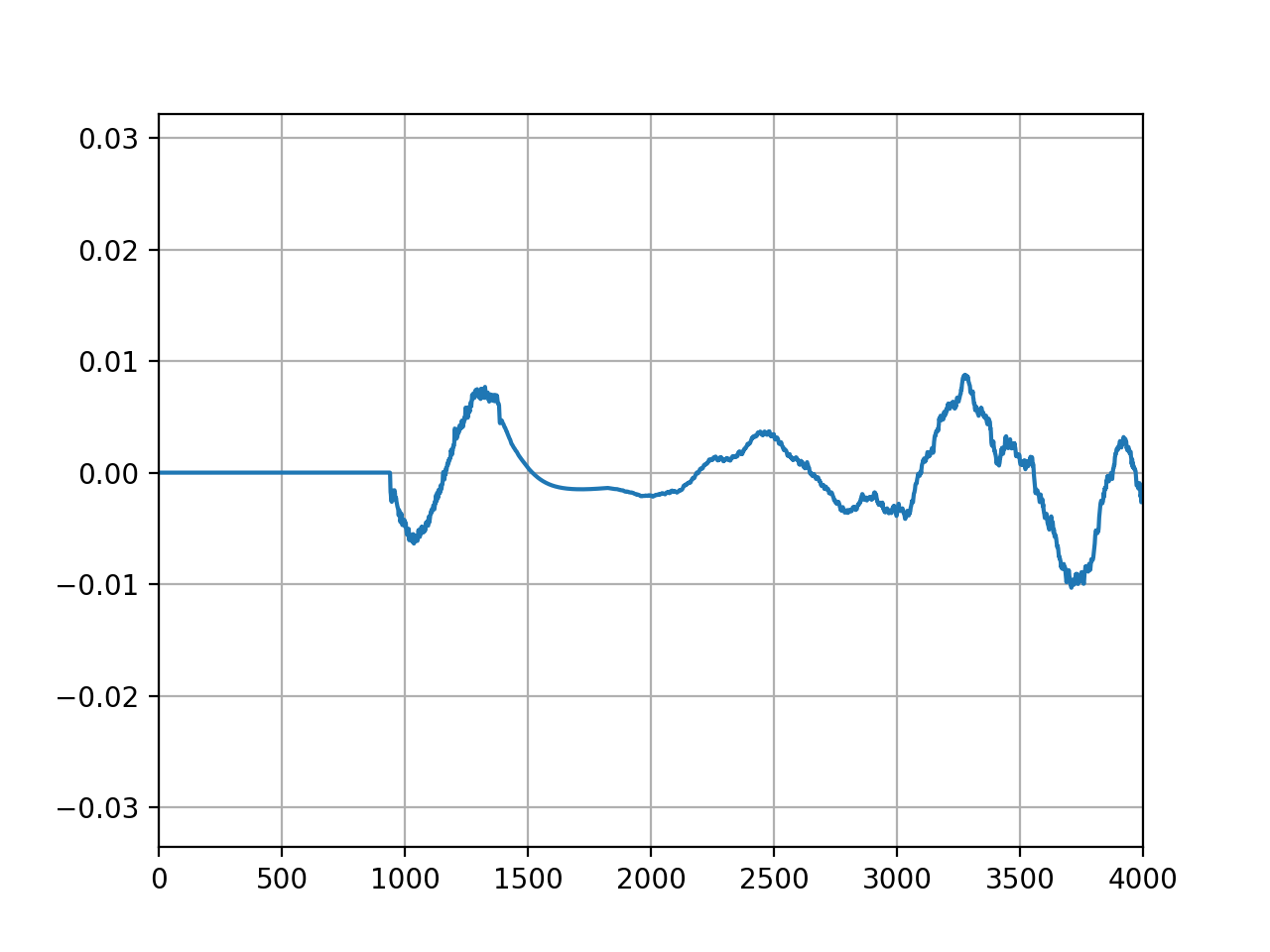

# Plot captured microphone signal

plt.plot(mic_buffer)

plt.show()

输出

检测到空帧

编辑:使用 CoreAudio 在 MacOS 上运行它。正如 @2e0byo 所指出的,这可能是相关的。

I've been having some issues when trying to synchronously playback and record audio to/from a device, in this case, my laptop speakers and microphone.

The problem

I've tried to implement this using the Python modules: "sounddevice" and "pyaudio"; but both implementations have this weird issue where the first few frames of recorded audio are always zero. Has anyone else experienced this type of issue? This issue seems to be independent of the chunksize that is used (i.e., its always the same amount of samples being zero).

Is there anything I can do to prevent this from happening?

Code

import queue

import matplotlib.pyplot as plt

import numpy as np

import pyaudio

import soundfile as sf

FRAME_SIZE = 512

excitation, fs = sf.read("excitation.wav", dtype=np.float32)

# Instantiate PyAudio

p = pyaudio.PyAudio()

q = queue.Queue()

output_idx = 0

mic_buffer = np.zeros((excitation.shape[0] + FRAME_SIZE

- (excitation.shape[0] % FRAME_SIZE), 1))

def rec_play_callback(in_data, framelength, time_info, status):

global output_idx

# print status of playback in case of event

if status:

print(f"status: {status}")

chunksize = min(excitation.shape[0] - output_idx, framelength)

# write data to output buffer

out_data = excitation[output_idx:output_idx + chunksize]

# write input data to input buffer

inputsamples = np.frombuffer(in_data, dtype=np.float32)

if not np.sum(inputsamples):

print("Empty frame detected")

# send input data to buffer for main thread

q.put(inputsamples)

if chunksize < framelength:

out_data[chunksize:] = 0

return (out_data.tobytes(), pyaudio.paComplete)

output_idx += chunksize

return (out_data.tobytes(), pyaudio.paContinue)

# Define playback and record stream

stream = p.open(rate=fs,

channels=1,

frames_per_buffer=FRAME_SIZE,

format=pyaudio.paFloat32,

input=True,

output=True,

input_device_index=1, # Macbook Pro microphone

output_device_index=2, # Macbook Pro speakers

stream_callback=rec_play_callback)

stream.start_stream()

input_idx = 0

while stream.is_active():

data = q.get(timeout=1)

mic_buffer[input_idx:input_idx + FRAME_SIZE, 0] = data

input_idx += FRAME_SIZE

stream.stop_stream()

stream.close()

p.terminate()

# Plot captured microphone signal

plt.plot(mic_buffer)

plt.show()

Output

Empty frame detected

Edit: running this on MacOS using CoreAudio. This might be relevant, as pointed out by @2e0byo.

如果你对这篇内容有疑问,欢迎到本站社区发帖提问 参与讨论,获取更多帮助,或者扫码二维码加入 Web 技术交流群。

绑定邮箱获取回复消息

由于您还没有绑定你的真实邮箱,如果其他用户或者作者回复了您的评论,将不能在第一时间通知您!

发布评论

评论(2)

这是一个普遍问题,我们缺少对您的架构的完整了解。所以我们能做的就是指出一些一般概念。

在数字信号处理系统中,处理后的信号中经常存在前导空白和恒定延迟。这通常与缓冲区的大小和采样率有关。在某些系统中,您甚至可能不知道缓冲区的存在,例如作为用户级 API 无法访问的设备驱动程序的一部分。

要减少缓冲造成的偏移,您必须减小缓冲区或加快采样速度。然后,您的系统必须更频繁地处理较小的数据包,并且数据包大小或采样时钟的变化可能会影响您的信号处理,具体取决于您的信号内容和您正在进行的信号处理类型。因此,进行这些更改中的任何一个都会增加系统处理的每个数据的开销,并且还可能以其他方式影响性能。

我用于调试此类问题的一种方法是尝试找到设置偏移量的缓冲区,如果需要,可以跟踪源代码,然后查看是否可以调整大小或采样率并仍然实现所需的性能在吞吐量和准确性方面。

This is a general question, and we are missing a complete view of your architecture. So the best we can do is point to some general concepts.

In digital signal processing systems, there is very often a leading blank and a constant delay in the processed signal. This is most often related to the size of a buffer and the sampling rate. In some systems you may not even be aware that the buffer is there, for example as part of a device driver that is not accessible to the user level API.

To reduce an offset due to buffering, you have to make the buffer smaller or sample faster. Your system then has to process smaller packets but more often, and changes in either packet size or sampling clock, can effect your signal processing, depending on your signal content and the kind of signal processing you are doing. So making either of these changes comes with an increase in the overhead per data processed through the system and may also effect performance in other ways.

An approach that I use for debugging problems of this sort is to try to find the buffer that is setting the offset, tracing through source code if needed, and then see whether you can adjust size or sampling rate and still achieve the performance that you need in terms of throughput and accuracy.

为了未来的自己。

找到了解决方法。在

stream.start_stream()之后,添加If you value the one non-zero chunk that终止循环,存储它:

之后,

v是的一个实例bytes,长度取决于流格式。由于问题中它是 32 位浮点数,因此len(v)为 4。For my future self.

Got a workaround. After

stream.start_stream(), addIf you value the one non-zero chunk that terminates the loop, store it:

After that,

vis an instance ofbytes, the length depends on the stream format. As it's a 32-bit float in the question,len(v)is 4.