"Client-Server" performance issue


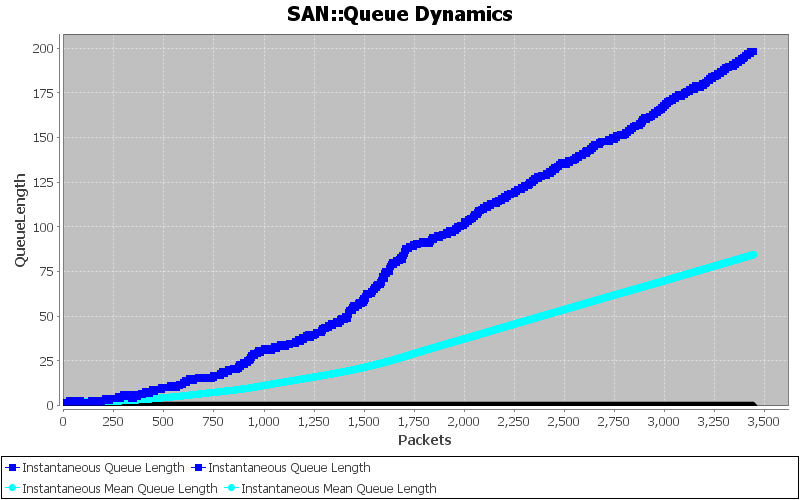

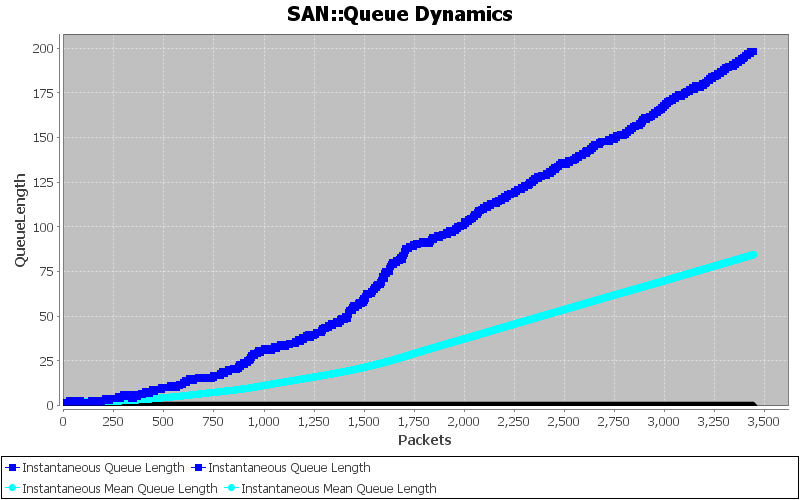


I have a "Queuing Theory" problem where the following are to be done:

- Develop a CLIENT to send continuous packets of fixed size to the SERVER at a fixed rate

- The SERVER has to queue these packets and SORT them before handling them

- Then we need to prove (for some packet size 'n' bytes and rate 'r' MBps) the theoretical observation that sorting (n log n / CPU_FREQ) happens faster than queuing (n / r), and thus the QUEUE should not build up at all.
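To make the two quantities in the last bullet concrete, here is a minimal sketch that evaluates both for the 10000-byte, 10 MBps case used in the sample graph. The class and method names are hypothetical, the 2 GHz clock is an assumed value, 1 MB is taken as 1,000,000 bytes, and "one operation per cycle" is an idealisation rather than a measurement.

```java
public class QueueBudget {
    // Assumption: 1 MB = 1,000,000 bytes.
    static final double BYTES_PER_MB = 1_000_000.0;

    /** Time between packet arrivals, n / r, in seconds. */
    public static double interArrivalSeconds(int packetBytes, double rateMBps) {
        return packetBytes / (rateMBps * BYTES_PER_MB);
    }

    /** Idealised sort time, n * log2(n) / CPU_FREQ, in seconds (assumes one operation per cycle). */
    public static double sortSeconds(int packetBytes, double cpuHz) {
        double log2n = Math.log(packetBytes) / Math.log(2.0);
        return packetBytes * log2n / cpuHz;
    }

    public static void main(String[] args) {
        int n = 10_000;        // packet size in bytes (from the sample graph)
        double r = 10.0;       // rate in MBps (from the sample graph)
        double cpuHz = 2.0e9;  // hypothetical 2 GHz CPU, not taken from the question
        System.out.printf("inter-arrival: %.1f us%n", interArrivalSeconds(n, r) * 1e6);
        System.out.printf("sort estimate: %.1f us%n", sortSeconds(n, cpuHz) * 1e6);
    }
}
```

Under these assumptions a packet arrives every 1 ms while the idealised sort takes well under 0.1 ms, which is the theoretical reason the queue should stay short. A real sort costs more than one cycle per operation, so the estimate is only a lower bound.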

However, I find that the queue is always building up when running on two systems (client and server on separate PCs/laptops).

Note: When I run both processes on the same system, the queue doesn't build up, and most of the time it stays down at around 1 - 20 packets.

I need someone to check/review my code.

The code is pasted here:

Client (single class):

Server (multiple class files, package serverClasses):

- Main: http://pastebin.com/embed_iframe.php?i=BgZzfiTQ

- Sorting: http://pastebin.com/embed_iframe.php?i=mPh8zgqC

- ServerThreadPerClient: http://pastebin.com/embed_iframe.php?i=ZpTqpHnX

- GlobalStatistics: http://pastebin.com/embed_iframe.php?i=Q2DJLvaV

Sample graph of "QUEUE_LEN vs. #PACKETS" for 10 MBps and 10000-byte packets over a duration of 30 - 35 secs


Comments (1)


On the client, it looks to me like `timeinterval` is always going to be 0. Was that the intention? You say seconds in the code, but you are missing the `* 1000`. You then call `Thread.sleep((long) timeinterval)`. Since `sleep()` takes a `long`, this will sleep at most 1 ms and usually (I suspect) 0 ms. Sleep only has millisecond resolution. If you want nanosecond resolution, you'll have to use a call that accepts nanoseconds instead.

I suspect that your CPU is limiting your runs when both the client and the server are on the same box. When they are on different boxes, things back up because the client is in effect flooding the server due to the improper sleep times.
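As an illustration of the sleep issue described above, here is a minimal sketch of a sender paced with nanosecond deadlines. It assumes the client computes its interval as a fractional number of seconds (the original client code is not shown here); `PacedSender`, `intervalNanos` and `sendPaced` are hypothetical names, and the empty lambda stands in for the real socket write. It uses `LockSupport.parkNanos` and `System.nanoTime`, which is one possible approach and not necessarily what the original answer proposed.

```java
import java.util.concurrent.locks.LockSupport;

public class PacedSender {
    /** Interval between packets in nanoseconds (assumes 1 MB = 1,000,000 bytes). */
    public static long intervalNanos(int packetBytes, double rateMBps) {
        double seconds = packetBytes / (rateMBps * 1_000_000.0);
        return Math.round(seconds * 1_000_000_000.0);
    }

    /** Runs sendOne 'count' times, pacing against absolute deadlines so timing error does not accumulate. */
    public static void sendPaced(int count, long intervalNanos, Runnable sendOne) {
        long next = System.nanoTime();
        for (int i = 0; i < count; i++) {
            sendOne.run();
            next += intervalNanos;
            long remaining;
            // parkNanos may return early, so re-check the deadline in a loop.
            while ((remaining = next - System.nanoTime()) > 0) {
                LockSupport.parkNanos(remaining);
            }
        }
    }

    public static void main(String[] args) {
        double timeinterval = 10_000 / (10.0 * 1_000_000.0); // 0.001 seconds
        System.out.println((long) timeinterval);             // the cast truncates the fraction to 0

        long interval = intervalNanos(10_000, 10.0);         // 1 ms expressed in nanoseconds
        long start = System.nanoTime();
        sendPaced(100, interval, () -> { /* hypothetical socket write goes here */ });
        System.out.printf("100 packets in %.1f ms%n", (System.nanoTime() - start) / 1e6);
    }
}
```

The actual wake-up precision still depends on the operating system scheduler, so the achieved rate is worth measuring rather than assuming.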

That's my best guess at least.