Displaying multiple frames of a video element simultaneously

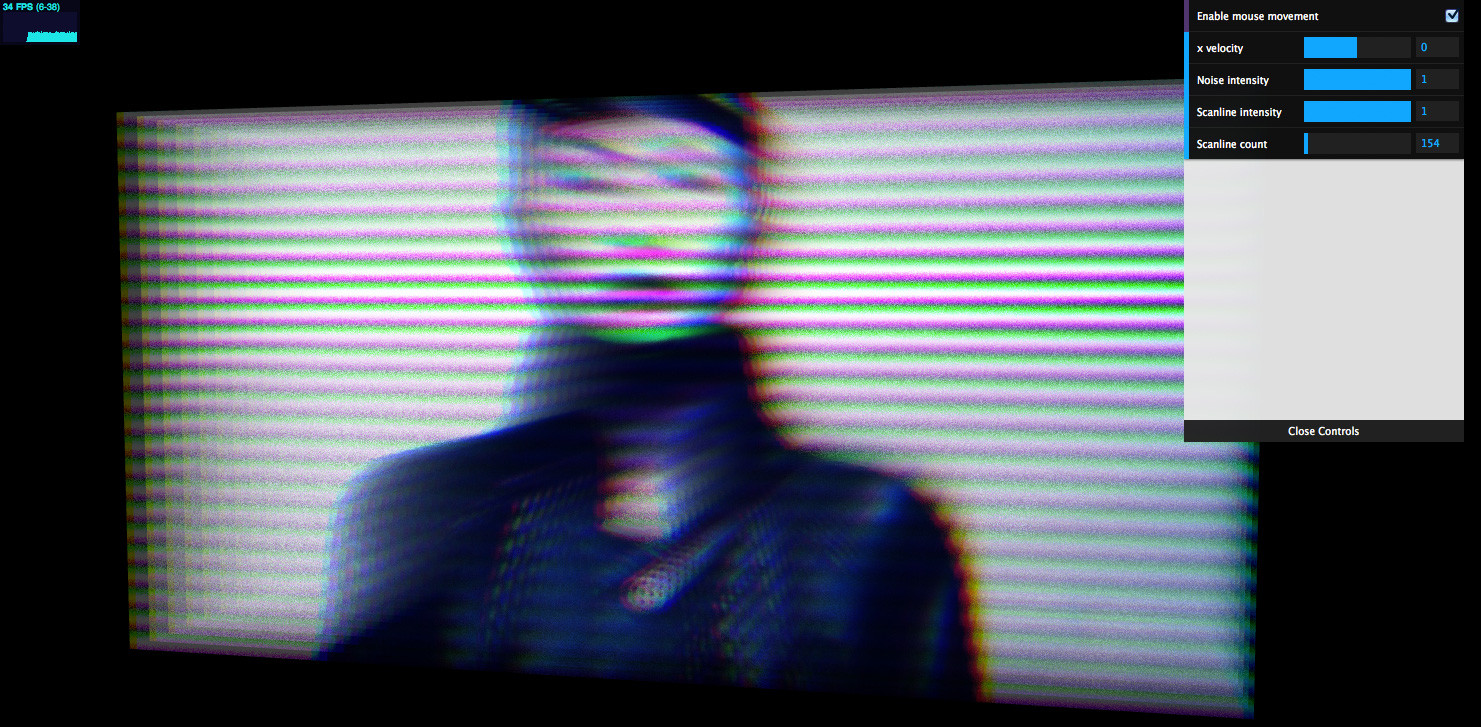

Some background: graphics newbie here, have just dipped my toes into the world of 3D in the browser with mrdoob's excellent three.js. I intend to go through all the tuts at http://learningwebgl.com/ soon :)

I'd like to know how one would roughly go about re-creating something similar to:

http://yooouuutuuube.com/v/?width=192&height=120&vm=29755443&flux=0&direction=rand

My naive understanding of how yooouuutuuube works is as follows:

- Create a massive BitmapData (larger than any reasonable browser window size).

- Determine the number of required rows / columns (across the entire BitmapData plane, not just the visible area) based on the width/height of the target video frame

- Copy pixels from the most recent video frame to a position on the BitmapData (based on the direction of movement)

- Iterate through every cell in the BitmapData, copying pixels from the cell that precedes it

- Scroll the entire BitmapData in the opposite direction to create the illusion of movement, with a Zoetrope-type effect
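The steps above can be sketched in a few lines. This is only a minimal model of my understanding, not how yooouuutuuube is actually implemented: `gridDimensions`, `createGrid` and `pushFrame` are hypothetical names, each cell holds a frame label instead of real pixels, and the scroll step is left out.

```typescript
// Hypothetical sketch: each cell stands in for one video frame's pixels.
type Cell<T> = T | null;

// Number of rows/columns needed to cover the whole plane with frame-sized cells.
function gridDimensions(
  planeWidth: number,
  planeHeight: number,
  frameWidth: number,
  frameHeight: number
): { cols: number; rows: number } {
  return {
    cols: Math.ceil(planeWidth / frameWidth),
    rows: Math.ceil(planeHeight / frameHeight),
  };
}

// Flat, row-major grid of empty cells.
function createGrid<T>(rows: number, cols: number): Cell<T>[] {
  return new Array<Cell<T>>(rows * cols).fill(null);
}

// Copy every cell from the one that precedes it, then put the newest frame
// in cell 0. Walking backwards avoids overwriting a cell before it is copied.
function pushFrame<T>(grid: Cell<T>[], frame: T): void {
  for (let i = grid.length - 1; i > 0; i--) {
    grid[i] = grid[i - 1];
  }
  grid[0] = frame;
}
```

With the 192x120 frame size from the URL above and an assumed 1920x1200 plane, `gridDimensions` gives a 10x10 grid. Calling `pushFrame` once per video frame then leaves the newest frame in cell 0 and progressively older frames in the cells after it.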

I'd like to do this in WebGL as opposed to using Canvas so I can take advantage of post-processing using shaders (noise and color channel separation to mimic chromatic aberration).

Here's a screenshot of what I have so far:

- Three videos (same video, but separated into R, G and B channels) are drawn to a canvas 2D context. Each video is slightly offset in order to fake that chromatic aberration look.

- A texture is created in three.js which references this canvas. This texture is updated every draw cycle.

- A shader material is created in three.js which is linked to a fragment shader (which creates noise / scanlines).

- This material is then applied to a number of 3D planes.
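For reference, the channel offset from the first bullet can also be written per pixel. This is not what my setup does (I draw three pre-separated videos to the canvas); it is a rough sketch that assumes an RGBA, row-major buffer like the one `ImageData.data` exposes, and `offsetChannels` is a hypothetical helper name.

```typescript
// Hypothetical helper: returns a copy of an RGBA image in which red is sampled
// `shift` pixels to the left and blue `shift` pixels to the right, with green
// and alpha left in place. Samples past the edge are clamped to the border.
function offsetChannels(
  src: Uint8ClampedArray,
  width: number,
  height: number,
  shift: number
): Uint8ClampedArray {
  const out = new Uint8ClampedArray(src.length);
  const clampX = (x: number): number => Math.min(width - 1, Math.max(0, x));
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4;                 // this pixel
      const r = (y * width + clampX(x - shift)) * 4; // red source pixel
      const b = (y * width + clampX(x + shift)) * 4; // blue source pixel
      out[i] = src[r];         // red from the left
      out[i + 1] = src[i + 1]; // green unchanged
      out[i + 2] = src[b + 2]; // blue from the right
      out[i + 3] = src[i + 3]; // alpha unchanged
    }
  }
  return out;
}
```

The same arithmetic could presumably move into the fragment shader by sampling the texture three times at slightly different coordinates, which would mean only one video has to be drawn to the canvas.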

This works just fine for showing single frames of video, but I'd like to see if I could show multiple frames at once without needing to add additional geometry.

What would be the optimal way of going about such a task? Are there any concepts that I should be studying/investigating in further detail?
