Boost.Asio multi io_service RPC framework design RFC

我正在开发一个 RPC 框架,我想使用多 io_service 设计来将执行 IO(前端)的 io_objects 与执行 RPC 工作的线程(后端)解耦。 )。

前端应该是单线程,后端应该有线程池。我正在考虑一种设计,让前端和后端使用条件变量进行同步。然而,似乎 boost::thread 和 boost::asio 并没有融合——即,条件变量 async_wait 支持似乎不可用。我有一个关于此事的问题此处。

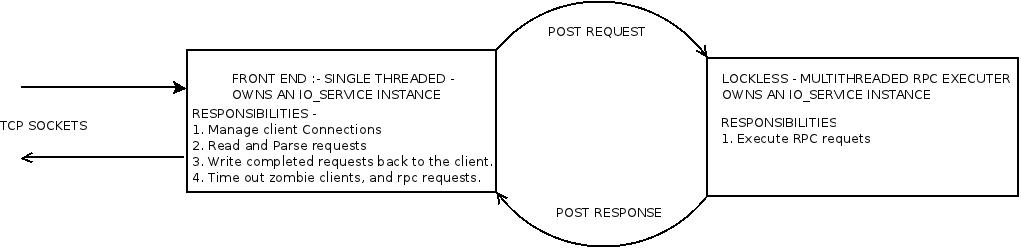

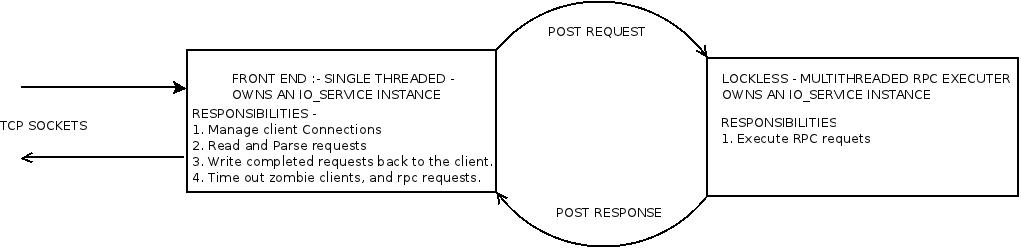

我想到 io_service::post() 可能用于同步两个 io_service 对象。我在下面附上了一张图表,我只是想知道我是否正确理解了 post 机制,以及这是否是一个明智的实现。

I am working on a RPC framework, I want to use a multi io_service design to decouple the io_objects that perform the IO (front-end) from the the threads that perform the RPC work (the back-end).

The front-end should be single threaded and the back-end should have a thread pool. I was considering a design to get the front-end and back-end to synchronise using a condition variables. However, it seems boost::thread and boost::asio do not comingle --i.e., it seems condition variable async_wait support is not available. I have a question open on this matter here.

It occured to me that io_service::post() might be used to synchronise the two io_service objects. I have attached a diagram below, I just want to know if I understand the post mechanism correctly, and weather this is a sensible implementation.

Comments (2)

I assume that you use "a single io_service and a thread pool calling io_service::run()".

Also, I assume that your front-end is single-threaded just to avoid a race condition when writing to the same socket from multiple threads.

The same goal can be achieved using io_service::strand (tutorial). Your front-end can be multi-threaded and synchronized by io_service::strand. All posts from back-end to front-end (and handlers from front-end to front-end, like handle_connect etc.) should be wrapped by strand, whereas posts from front-end to back-end shouldn't be wrapped by strand.

If your back-end is a thread pool calling any of the io_service::run(), io_service::run_one(), io_service::poll(), io_service::poll_one() functions and your handlers require access to shared resources, then you still have to take care to lock those shared resources somehow in the handlers themselves.

Given the limited amount of information posted in the question, I would assume this would work fine given the caveat above.

However, posting carries some measurable overhead for setting up the necessary completion ports and waiting, overhead you could avoid by using a different implementation of your back-end "queue".

Without knowing the exact details of what you need to accomplish, I would suggest that you look into Threading Building Blocks for pipelines or, perhaps more simply, for a concurrent queue.
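To make the "concurrent queue" alternative concrete, here is a minimal hand-rolled sketch using only the C++ standard library. It is not the Threading Building Blocks API, just a stand-in that shows the shape of such a back-end; `BlockingQueue` and `process_all` are hypothetical names, and the jobs are plain integers.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// A small blocking multi-producer, multi-consumer queue.
template <typename T>
class BlockingQueue {
public:
    void push(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            items_.push(std::move(value));
        }
        ready_.notify_one();
    }

    // Blocks until an item arrives; returns false once closed and drained.
    bool pop(T& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return closed_ || !items_.empty(); });
        if (items_.empty()) return false;
        out = std::move(items_.front());
        items_.pop();
        return true;
    }

    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> items_;
    bool closed_ = false;
};

// The front-end pushes jobs 1..jobs; worker threads pop and sum them.
long process_all(int jobs, std::size_t workers) {
    assert(workers > 0);
    BlockingQueue<int> queue;
    std::atomic<long> total(0);

    std::vector<std::thread> pool;
    for (std::size_t i = 0; i < workers; ++i)
        pool.emplace_back([&queue, &total] {
            int job = 0;
            while (queue.pop(job)) total += job;
        });

    for (int i = 1; i <= jobs; ++i) queue.push(i);
    queue.close();  // workers drain the remaining items, then exit
    for (auto& t : pool) t.join();
    return total.load();
}
```

A queue like this only covers the front-end to back-end direction; replies would still need some route back to the IO thread, for example a post onto the front-end io_service.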