Algorithm to detect the corners of a sheet of paper in a photo

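What is the best way to detect the corners of an invoice, receipt, or sheet of paper in a photo? This will be used for subsequent perspective correction, before OCR.

My current approach is:

RGB > Gray > Canny edge detection with thresholding > Dilate(1) > Remove small objects(6) > Clear border objects > Pick the largest blob based on convex area > [Corner detection, not implemented]

I can't help but think there must be a more robust 'intelligent'/statistical approach to handle this type of segmentation. I don't have a lot of training examples, but I could probably get 100 images together.

Broader context:

I'm using MATLAB to prototype, and planning to implement the system in OpenCV and Tesseract-OCR. This is the first of a number of image processing problems I need to solve for this specific application, so I'm looking to roll my own solution and re-familiarize myself with image processing algorithms.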

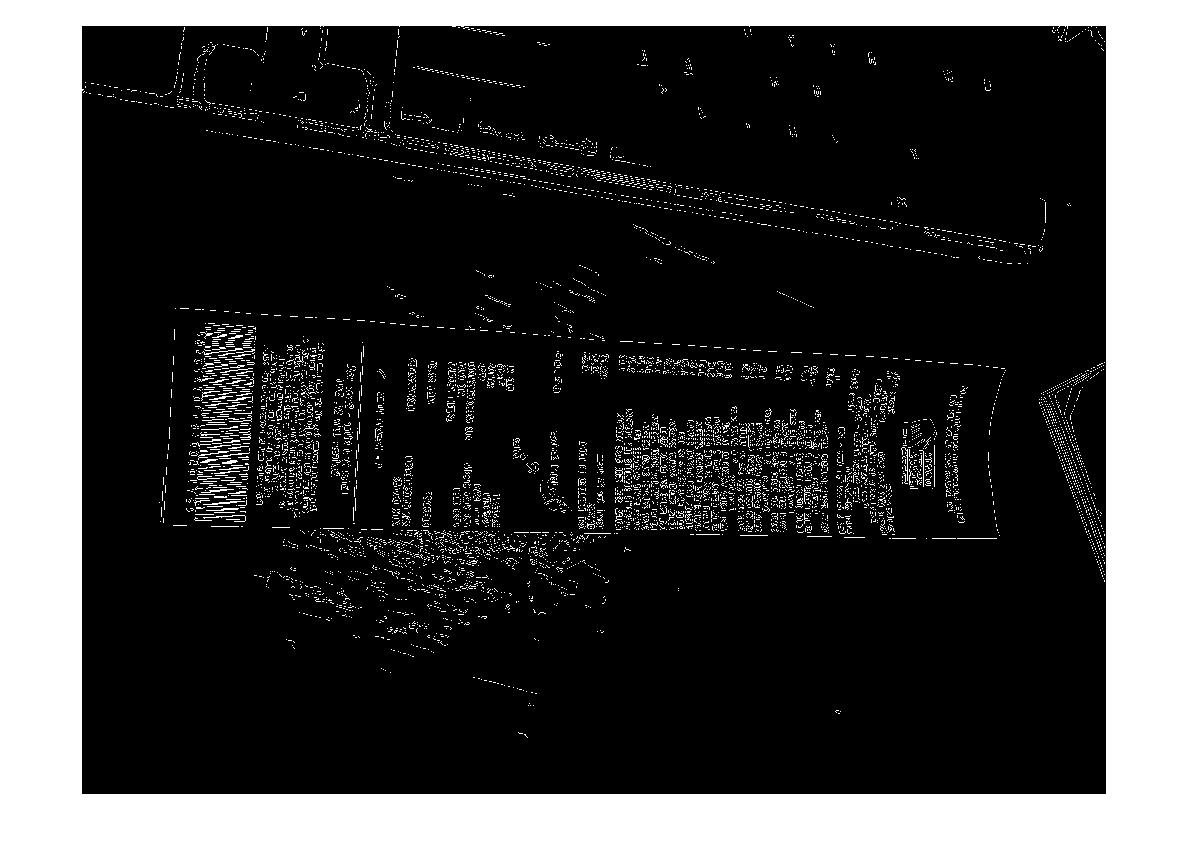

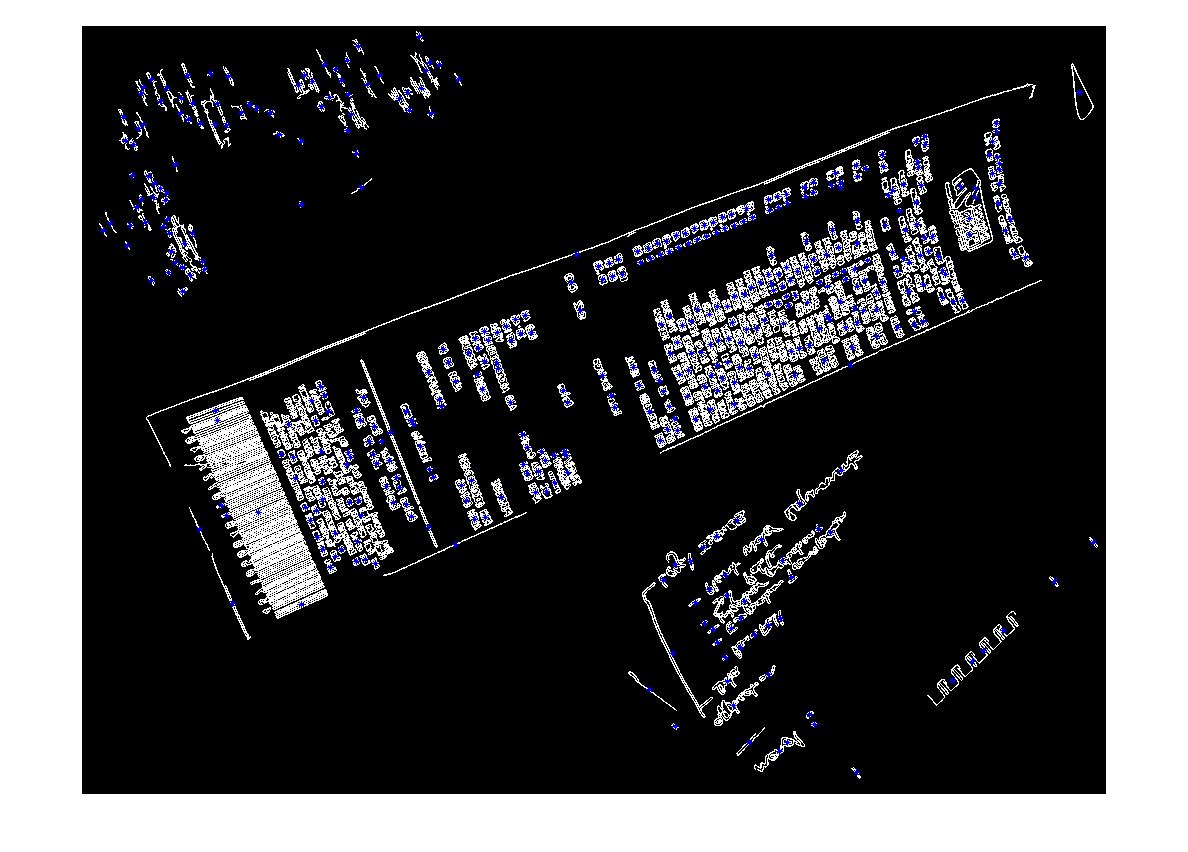

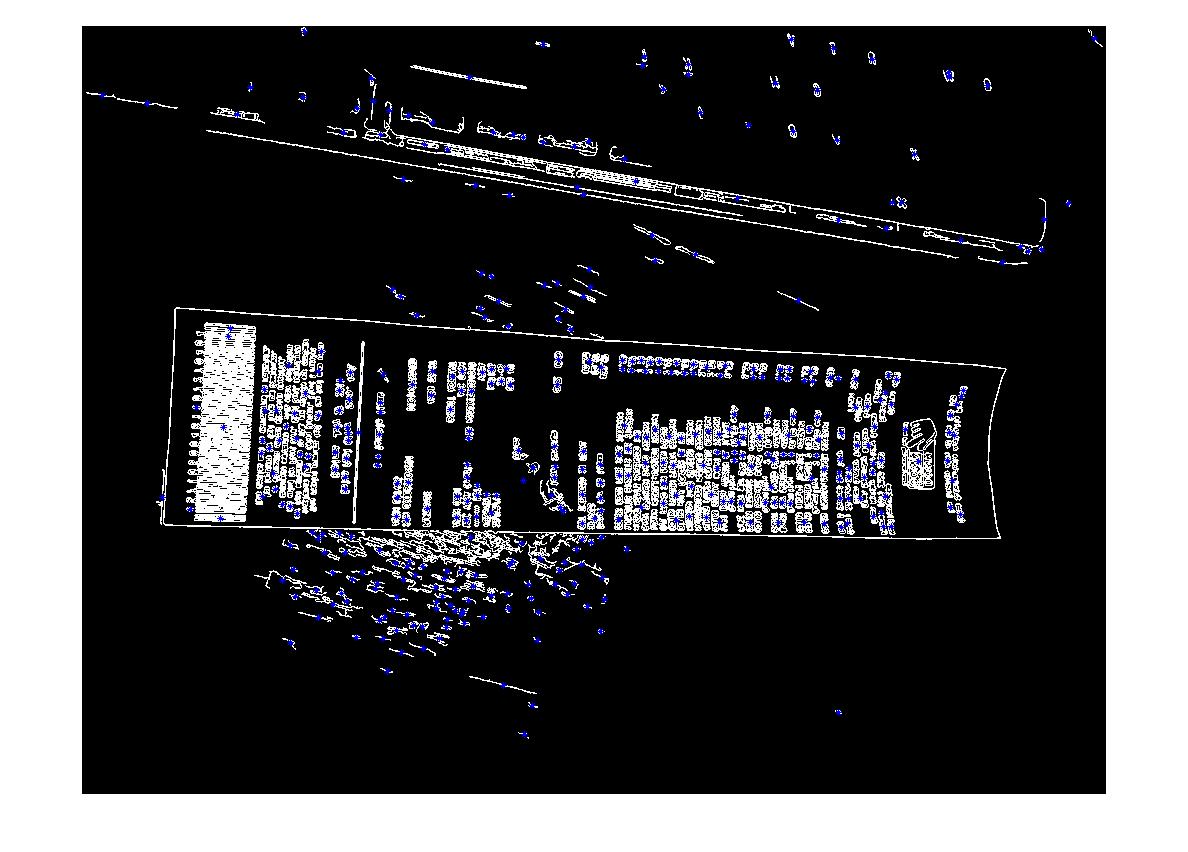

Here are some sample images that I'd like the algorithm to handle. If you'd like to take up the challenge, the large images are at http://madteckhead.com/tmp

(source: madteckhead.com)

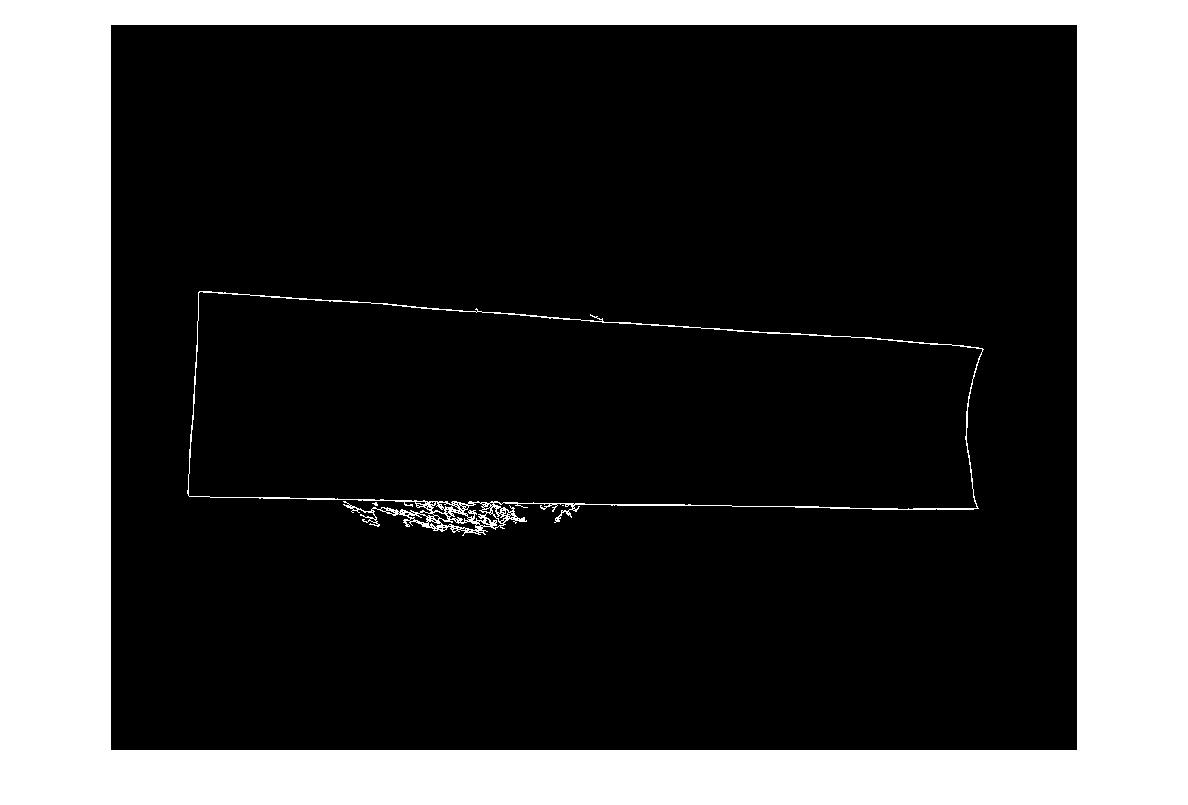

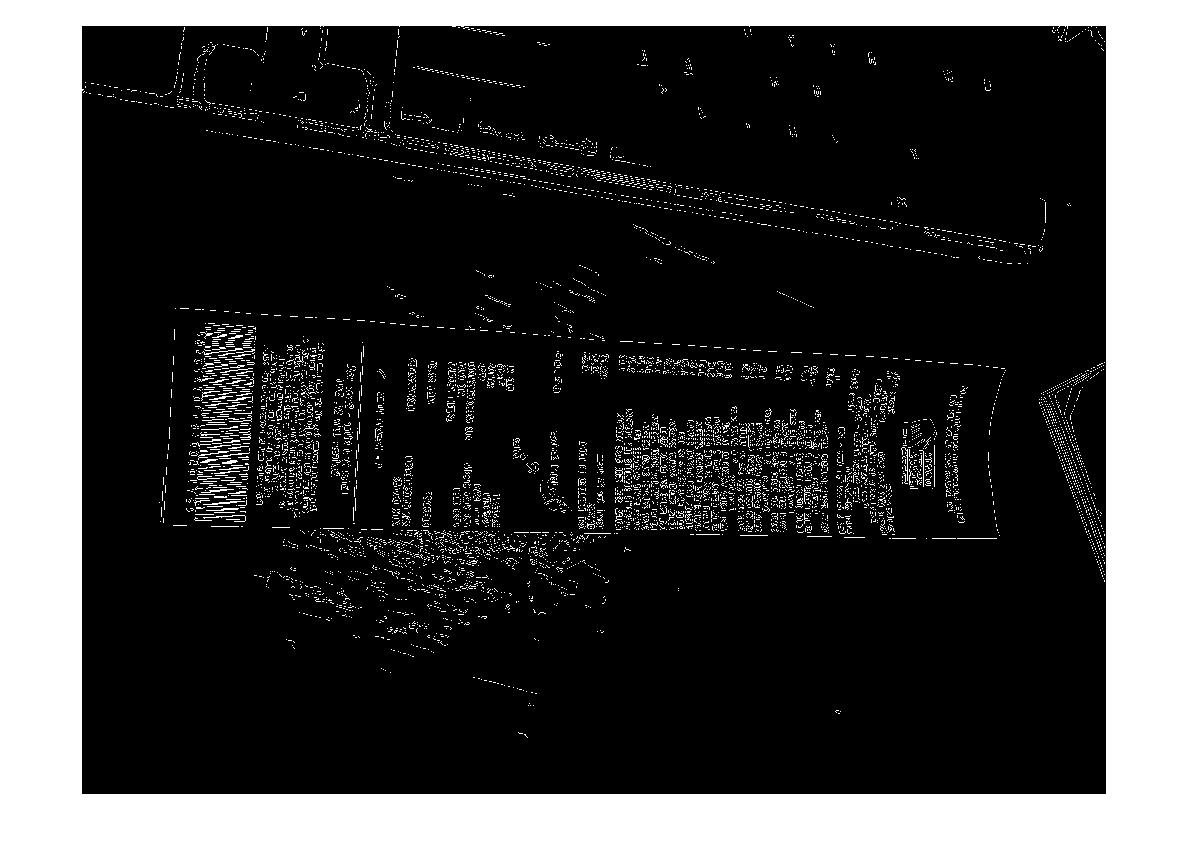

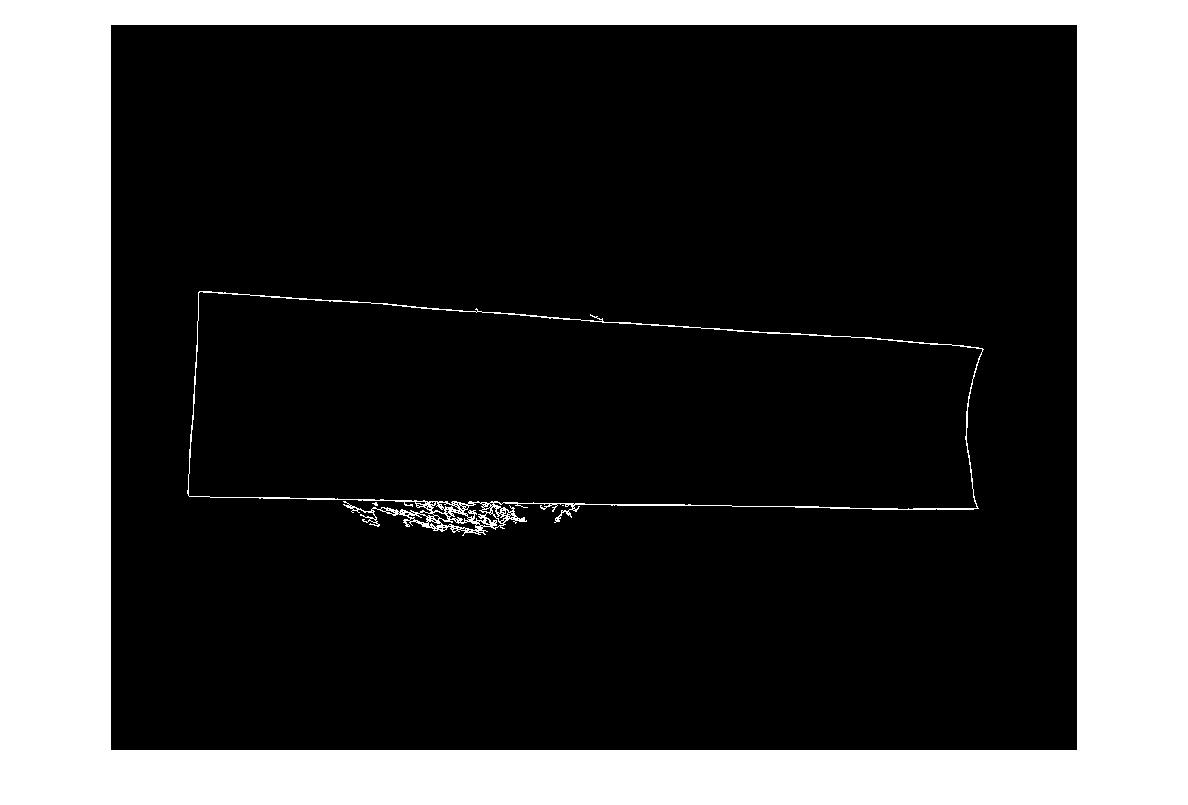

In the best case this gives:

(source: madteckhead.com)

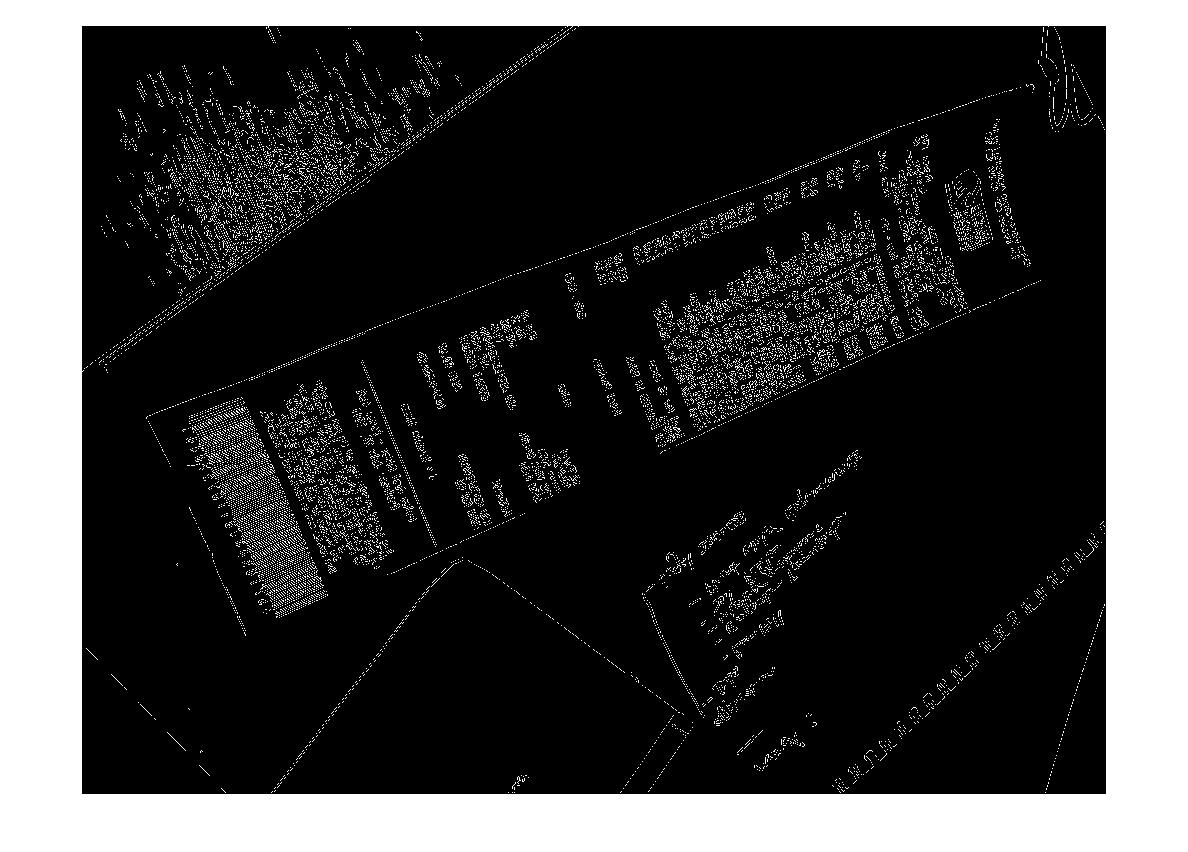

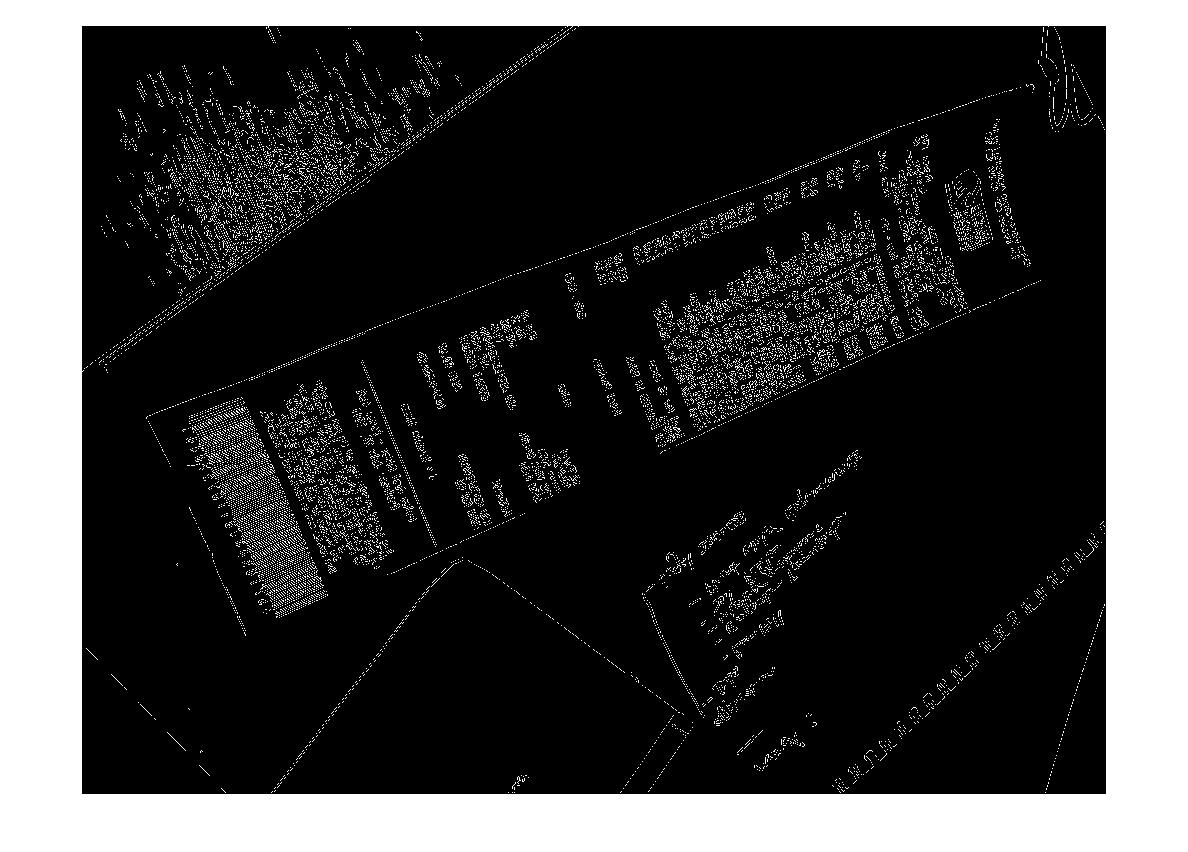

However, it fails easily in other cases:

(source: madteckhead.com)

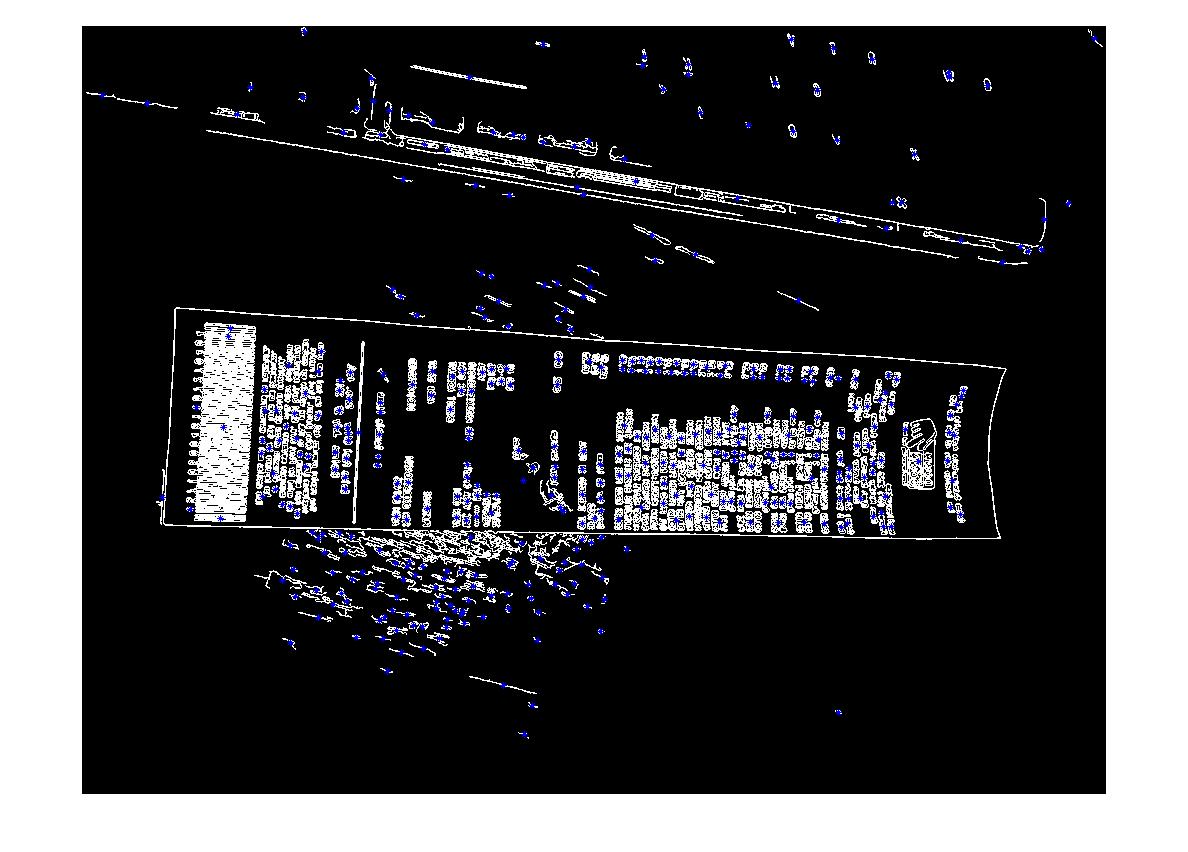

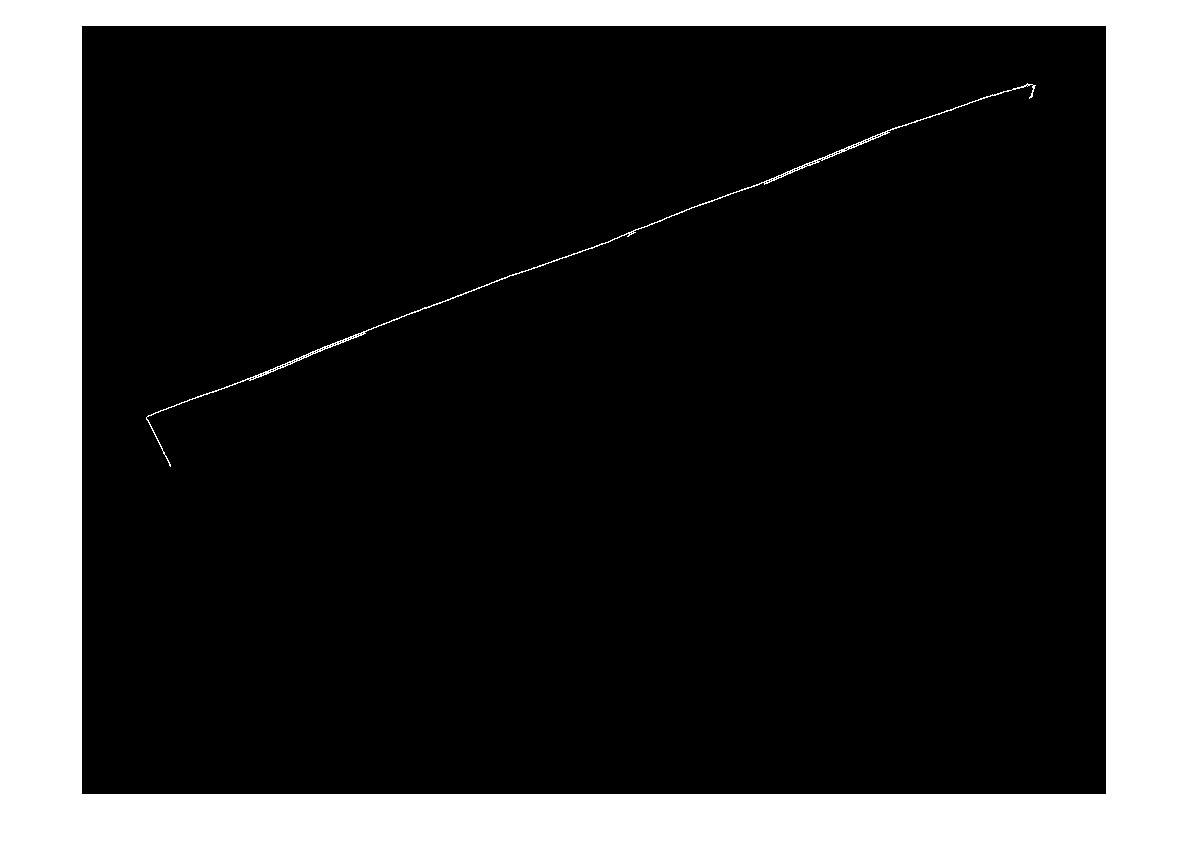

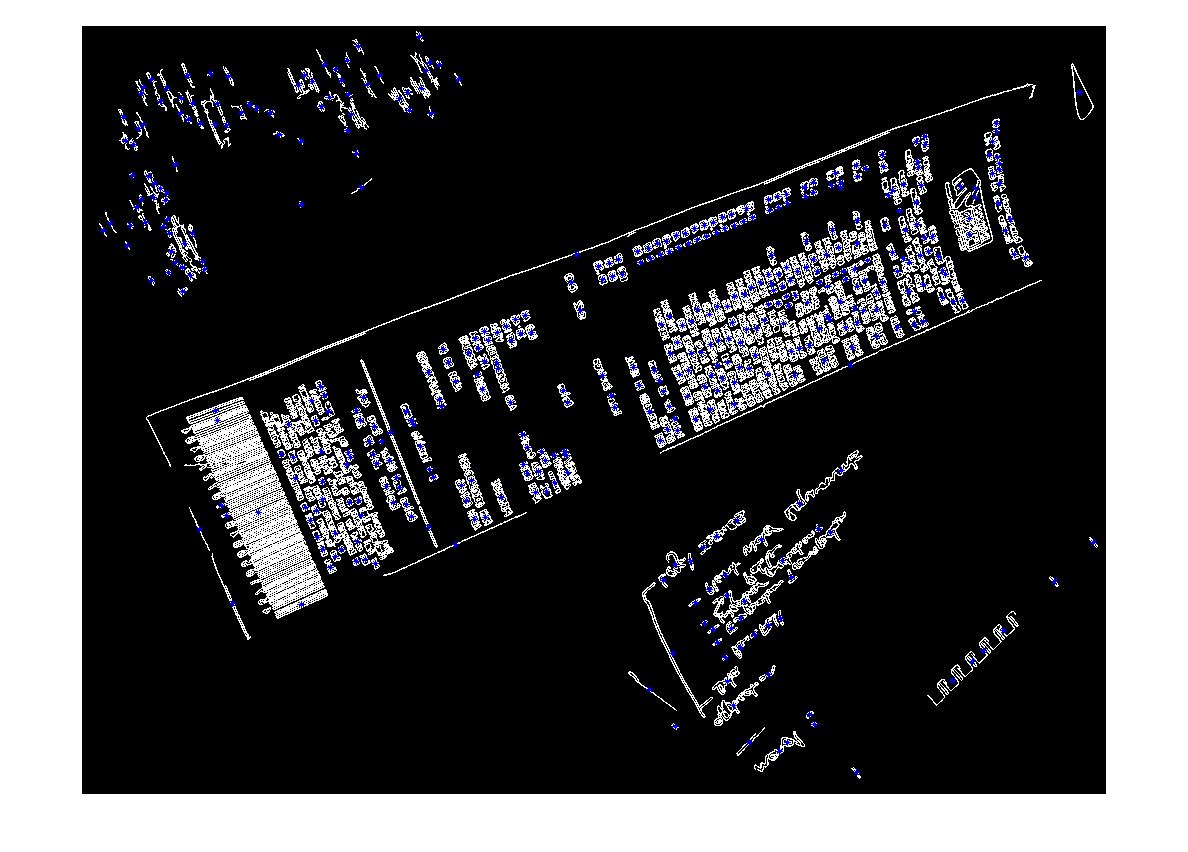

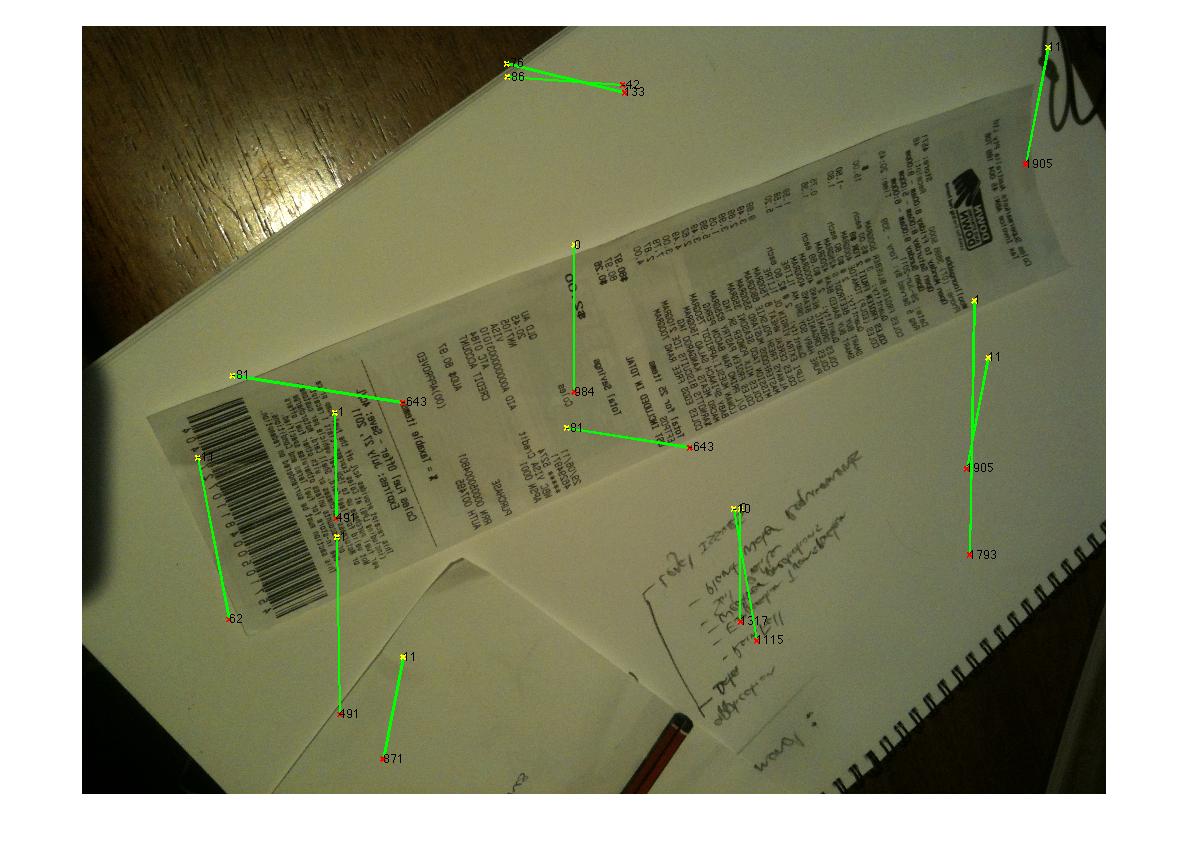

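EDIT: Hough Transform progress

Q: What algorithm would cluster the Hough lines to find corners?

Following advice from the answers, I was able to use the Hough transform, pick lines, and filter them. My current approach is rather crude. I've assumed the invoice will always be less than 15 degrees out of alignment with the image. If that holds, I end up with reasonable results for the lines (see below). But I'm not sure of a suitable algorithm to cluster the lines (or vote) to extrapolate the corners. The Hough lines are not continuous, and in noisy images there can be parallel lines, so some form of distance-from-line-origin metric is required. Any ideas?

(source: madteckhead.com)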
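On the point that the Hough lines are not continuous: in (rho, theta) form each line is infinite, so once one line per page edge has been chosen, the corners can be computed as intersections regardless of gaps in the detected segments. A minimal sketch in Python, assuming the standard Hough parameterisation x*cos(theta) + y*sin(theta) = rho. The function names `intersect_polar` and `page_corners` are hypothetical, and choosing the four edge lines is taken as already done:

```python
import math


def intersect_polar(line_a, line_b, eps=1e-9):
    """Intersect two lines given as (rho, theta) pairs.

    Each line satisfies x*cos(theta) + y*sin(theta) = rho.
    Returns (x, y), or None when the lines are (nearly) parallel.
    """
    rho1, theta1 = line_a
    rho2, theta2 = line_b
    a1, b1 = math.cos(theta1), math.sin(theta1)
    a2, b2 = math.cos(theta2), math.sin(theta2)
    det = a1 * b2 - a2 * b1  # equals sin(theta2 - theta1)
    if abs(det) < eps:
        return None
    # Cramer's rule on the 2x2 linear system.
    x = (rho1 * b2 - rho2 * b1) / det
    y = (a1 * rho2 - a2 * rho1) / det
    return x, y


def page_corners(verticals, horizontals):
    """Intersect every near-vertical edge line with every near-horizontal one."""
    return [intersect_polar(v, h) for h in horizontals for v in verticals]


if __name__ == "__main__":
    # Assumed example: left/right edges at x=10 and x=110,
    # top/bottom edges at y=20 and y=220.
    left, right = (10.0, 0.0), (110.0, 0.0)
    top, bottom = (20.0, math.pi / 2), (220.0, math.pi / 2)
    for corner in page_corners([left, right], [top, bottom]):
        print(corner)
```

This only covers the geometry; deciding which detected lines are the true page edges is still the open clustering question.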


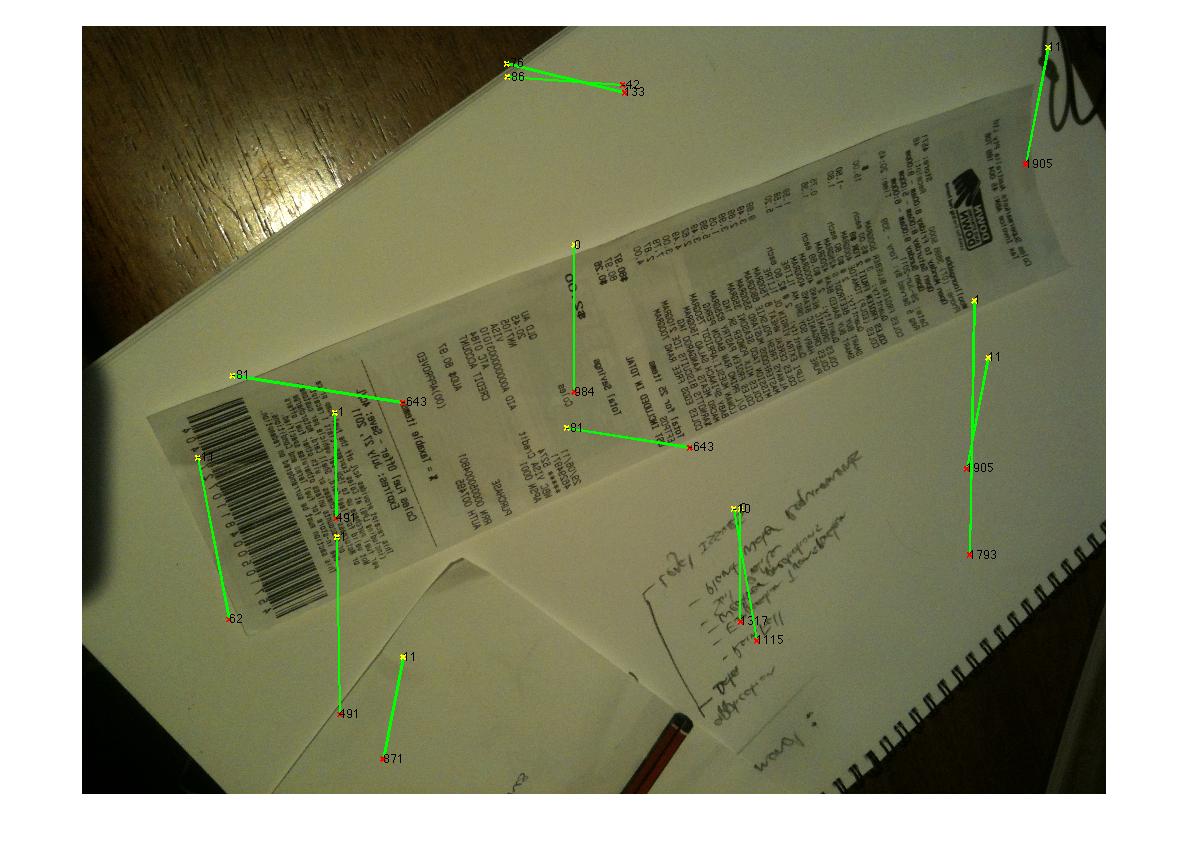



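I'm Martin's friend who was working on this earlier this year. This was my first ever coding project, and it kind of ended in a bit of a rush, so the code needs some errr... decoding...

I'll give a few tips based on what I've seen you doing already, and then sort out my code on my day off tomorrow.

First tip: OpenCV and Python are awesome, move to them as soon as possible. :D

Instead of removing small objects and/or noise, lower the Canny thresholds so it accepts more edges, and then find the largest closed contour (in OpenCV use `FindContours()` with some simple parameters; I think I used `CV_RETR_LIST`). It might still struggle when the document is on a white piece of paper, but it was definitely providing the best results.

For the `HoughLines2()` transform, try `CV_HOUGH_STANDARD` as opposed to `CV_HOUGH_PROBABILISTIC`. It gives rho and theta values, defining each line in polar coordinates, and you can then group the lines within a certain tolerance of those values. My grouping worked as a lookup table: each line output by the Hough transform gives a rho and theta pair. If these values were within, say, 5% of a pair already in the table, the line was discarded; if they were outside that 5%, a new entry was added to the table.

You can then analyse parallel lines or the distance between lines much more easily.

Hope this helps.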
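The lookup-table grouping described above could look roughly like the following Python sketch. It is not the original project code. As an assumption, it uses absolute tolerances (`rho_tol` in pixels, `theta_tol` in radians) instead of the 5% relative tolerance, because a relative tolerance behaves poorly when theta is close to zero. The name `group_lines` is hypothetical:

```python
import math


def group_lines(lines, rho_tol=10.0, theta_tol=math.radians(5)):
    """Keep one representative per cluster of similar (rho, theta) lines.

    A line is discarded when both its rho and its theta lie within the
    tolerances of an entry already in the table; otherwise it is added
    to the table as a new entry.
    """
    table = []
    for rho, theta in lines:
        for kept_rho, kept_theta in table:
            if abs(rho - kept_rho) <= rho_tol and abs(theta - kept_theta) <= theta_tol:
                break  # near-duplicate of an existing entry: discard
        else:
            table.append((rho, theta))  # no match found: new entry
    return table


if __name__ == "__main__":
    # Assumed Hough output: two near-duplicate pairs plus one distinct line.
    detected = [(100, 0.01), (103, 0.02), (300, 0.0), (50, 1.57), (52, 1.56)]
    print(group_lines(detected))
```

The first line seen in each cluster wins, so the result depends on input order. The sketch also ignores the wrap-around case where theta near 0 and theta near pi describe almost the same line with rho of opposite sign.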


Here's what I came up with after a bit of experimentation:

Not perfect, but it at least works for all the samples:


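A student group at my university recently demonstrated an iPhone app (and a Python OpenCV app) that they'd written to do exactly this. As I remember, the steps were something like this:

This seemed to work fairly well: they were able to take a photo of a piece of paper or a book, perform the corner detection, and then map the document in the image onto a flat plane in almost real time (there was a single OpenCV function to perform the mapping). There was no OCR when I saw it working.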
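To illustrate what mapping the document onto a flat plane involves, here is a dependency-free Python sketch that solves for the 3x3 perspective transform (homography) from four corner correspondences. It is not the students' code. In OpenCV the same job is normally done with `cv2.getPerspectiveTransform` followed by `cv2.warpPerspective`. The names `solve_linear`, `homography`, and `apply_homography`, and the corner coordinates in the demo, are assumptions made for illustration:

```python
def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src, dst):
    """3x3 matrix mapping four src points onto four dst points (h33 fixed to 1)."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map one (x, y) point through the homography."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


if __name__ == "__main__":
    # Assumed detected corners (TL, TR, BR, BL) and a 200x280 target page.
    corners = [(12, 8), (205, 20), (220, 290), (5, 270)]
    target = [(0, 0), (200, 0), (200, 280), (0, 280)]
    h = homography(corners, target)
    for c in corners:
        print(c, "->", apply_homography(h, c))
```

The corners must be supplied in a consistent order (here clockwise from top-left); mixing up the order yields a mirrored or twisted result.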


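Instead of starting from edge detection, you could use corner detection.

Marvin Framework provides an implementation of the Moravec algorithm for this purpose. You could find the corners of the paper as a starting point. Below is the output of Moravec's algorithm: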


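You can also apply MSER (maximally stable extremal regions) to the result of the Sobel operator to find the stable regions of the image. For each region returned by MSER, you can apply a convex hull and polygon approximation to obtain something like this:

However, this kind of detection is more useful for live detection over multiple frames than for a single picture, which does not always return the best result.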
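For readers unfamiliar with the Moravec detector mentioned earlier in this answer, the idea can be sketched in plain Python. This is not Marvin Framework's implementation. It assumes a grayscale image stored as a 2D list, a square window of radius `window`, and shifts in all eight directions; the function name `moravec` is hypothetical. The response at a pixel is the minimum sum of squared differences between the window and its shifted copies, so it is high only where the patch changes in every direction:

```python
def moravec(image, window=1):
    """Return a 2D list of Moravec corner responses for a grayscale image.

    Flat regions and straight edges have at least one shift direction
    that leaves the window unchanged, so their response is near zero.
    Corners change under every shift and get a high response.
    """
    height, width = len(image), len(image[0])
    shifts = [(-1, -1), (-1, 0), (-1, 1), (0, -1),
              (0, 1), (1, -1), (1, 0), (1, 1)]
    margin = window + 1  # keep the shifted window inside the image
    response = [[0.0] * width for _ in range(height)]
    for y in range(margin, height - margin):
        for x in range(margin, width - margin):
            best = None
            for dy, dx in shifts:
                ssd = 0.0
                for wy in range(-window, window + 1):
                    for wx in range(-window, window + 1):
                        diff = (image[y + wy + dy][x + wx + dx]
                                - image[y + wy][x + wx])
                        ssd += diff * diff
                if best is None or ssd < best:
                    best = ssd
            response[y][x] = best
    return response


if __name__ == "__main__":
    # Synthetic 12x12 image: a bright "page" whose top-left corner is at (4, 4).
    img = [[255 if (r >= 4 and c >= 4) else 0 for c in range(12)]
           for r in range(12)]
    resp = moravec(img)
    peak = max(((resp[r][c], (r, c)) for r in range(12) for c in range(12)))
    print("strongest response at", peak[1])
```

On real photos the response map is usually thresholded and followed by non-maximum suppression, which this sketch leaves out.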


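After edge detection, use the Hough transform.

Then feed those points, with their labels, into an SVM (support vector machine). If the examples have smooth lines on them, the SVM will have no difficulty separating the necessary parts of the example from the rest. My advice for the SVM is to include features such as connectivity and length. That is, if points are connected and form a long run, they are likely to be a line of the receipt. Then you can eliminate all the other points.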


Here is @Vanuan's code in C++:


Convert to Lab color space

Use k-means to segment into 2 clusters
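A small Python sketch of the clustering step, assuming the pixels have already been converted to Lab (in OpenCV, `cv2.cvtColor` with `cv2.COLOR_BGR2LAB` does the conversion, and `cv2.kmeans` offers a ready-made clustering routine). The function names `dist2` and `kmeans_two_clusters`, and the choice to seed the two centers with the darkest and brightest pixels, are assumptions for illustration:

```python
def dist2(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans_two_clusters(pixels, iterations=20):
    """Split Lab pixels into two clusters; returns (labels, centers).

    pixels is a list of (L, a, b) tuples. The centers are seeded with
    the darkest and the brightest pixel (by L) so the run is deterministic.
    """
    centers = [min(pixels, key=lambda p: p[0]), max(pixels, key=lambda p: p[0])]
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest center.
        labels = [min((0, 1), key=lambda k: dist2(p, centers[k])) for p in pixels]
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for k in (0, 1):
            members = [p for p, lab in zip(pixels, labels) if lab == k]
            if members:
                new_centers.append(tuple(sum(ch) / len(members) for ch in zip(*members)))
            else:
                new_centers.append(centers[k])
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return labels, centers


if __name__ == "__main__":
    # Assumed sample: three dark background pixels and three bright paper pixels.
    sample = [(20, 0, 0), (25, 1, -1), (22, 2, 0), (90, 0, 5), (95, -1, 4), (88, 1, 6)]
    labels, centers = kmeans_two_clusters(sample)
    print(labels)
    print(centers)
```

With paper on a darker background, the cluster with the higher L center would be the candidate paper region; its outline can then be passed to a contour or corner step.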
