Twitter image encoding challenge

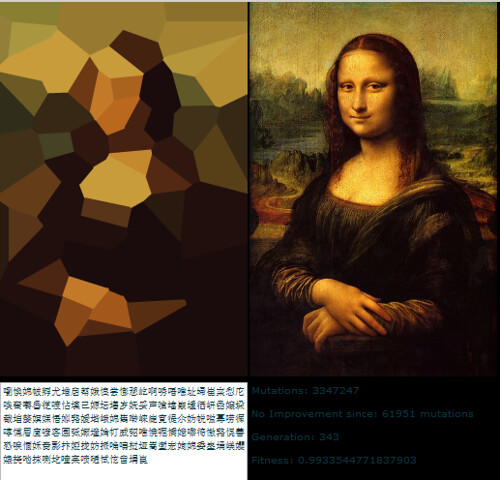


If a picture's worth 1000 words, how much of a picture can you fit in 140 characters?

Note: That's it, folks! The bounty deadline is here, and after some tough deliberation, I have decided that Boojum's entry just barely edged out Sam Hocevar's. I will post more detailed notes once I've had a chance to write them up. Of course, everyone should feel free to continue to submit solutions and improve solutions for people to vote on. Thank you to everyone who submitted an entry; I enjoyed all of them. This has been a lot of fun for me to run, and I hope it's been fun for both the entrants and the spectators.

I came across this interesting post about trying to compress images into a Twitter comment, and lots of people in that thread (and a thread on Reddit) had suggestions about different ways you could do it. So, I figure it would make a good coding challenge; let people put their money where their mouth is, and show how their ideas about encoding can lead to more detail in the limited space that you have available.

I challenge you to come up with a general-purpose system for encoding images into 140-character Twitter messages, and decoding them into an image again. You can use Unicode characters, so you get more than 8 bits per character. Even allowing for Unicode characters, however, you will need to compress images into a very small amount of space; this will certainly be a lossy compression, and so there will have to be subjective judgements about how good each result looks.

Here is the result that the original author, Quasimondo, got from his encoding (image is licensed under a Creative Commons Attribution-Noncommercial license):

Can you do better?

Rules

- Your program must have two modes: encoding and decoding.

- When encoding:

- Your program must take as input a graphic in any reasonable raster graphic format of your choice. We'll say that any raster format supported by ImageMagick counts as reasonable.

- Your program must output a message which can be represented in 140 or fewer Unicode code points; that is, 140 code points in the range U+0000–U+10FFFF, excluding noncharacters (U+FFFE, U+FFFF, U+nFFFE and U+nFFFF where n is 1–10 hexadecimal, and the range U+FDD0–U+FDEF) and surrogate code points (U+D800–U+DFFF). It may be output in any reasonable encoding of your choice; any encoding supported by GNU iconv will be considered reasonable, and your platform native encoding or locale encoding would likely be a good choice. See the Unicode notes below for more details.
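As a sketch of the validity rule above, here is a checker for a candidate message. It assumes Python 3, and `is_allowed` and `message_ok` are hypothetical helper names, not part of the rules:

```python
def is_allowed(cp: int) -> bool:
    """True if code point cp may appear in an encoded message."""
    if not 0 <= cp <= 0x10FFFF:
        return False
    if 0xD800 <= cp <= 0xDFFF:    # surrogate code points
        return False
    if 0xFDD0 <= cp <= 0xFDEF:    # noncharacter range U+FDD0-U+FDEF
        return False
    if (cp & 0xFFFE) == 0xFFFE:   # U+FFFE, U+FFFF and U+nFFFE, U+nFFFF in every plane
        return False
    return True


def message_ok(msg: str, limit: int = 140) -> bool:
    """True if msg has at most `limit` code points, all of them allowed."""
    # len() on a Python str counts code points, not bytes or UTF-16 units.
    return len(msg) <= limit and all(is_allowed(ord(ch)) for ch in msg)
```

Summing `is_allowed` over the whole code space gives 1,111,998 code points: 1,114,112 minus 2,048 surrogates and 66 noncharacters. Because the rules also admit unassigned code points, this is larger than the assigned-character set that the Unicode notes prefer.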

- When decoding:

- Your program should take as input the output of your encoding mode.

- Your program must output an image in any reasonable format of your choice, as defined above, though vector formats are also OK for output.

- The image output should be an approximation of the input image; the closer you can get to the input image, the better.

- The decoding process may have no access to any other output of the encoding process other than the output specified above; that is, you can't upload the image somewhere and output the URL for the decoding process to download, or anything silly like that.

For the sake of consistency in user interface, your program must behave as follows:

- Your program must be a script that can be set to executable on a platform with the appropriate interpreter, or a program that can be compiled into an executable.

- Your program must take as its first argument either `encode` or `decode` to set the mode. Your program must take input in one or more of the following ways (if you implement the one that takes file names, you may also read and write from stdin and stdout if file names are missing):

- Take input from standard in and produce output on standard out: `my-program encode <input.png >output.txt` and `my-program decode <output.txt >output.png`.

- Take input from a file named in the second argument, and produce output in the file named in the third: `my-program encode input.png output.txt` and `my-program decode output.txt output.png`.
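Below is a minimal Python sketch of this command-line contract. `encode_image` and `decode_text` are hypothetical placeholders for the actual codec; they are not part of the rules:

```python
import sys


def parse_args(argv):
    """Split argv (without the program name) into (mode, input, output).

    A missing path is returned as None, meaning stdin or stdout is used.
    """
    if not argv or argv[0] not in ("encode", "decode") or len(argv) > 3:
        raise SystemExit("usage: my-program encode|decode [input [output]]")
    mode = argv[0]
    in_path = argv[1] if len(argv) > 1 else None
    out_path = argv[2] if len(argv) > 2 else None
    return mode, in_path, out_path


def encode_image(image_bytes):
    """Hypothetical placeholder: raster image bytes -> at most 140 code points."""
    raise NotImplementedError


def decode_text(text):
    """Hypothetical placeholder: encoded text -> image file bytes."""
    raise NotImplementedError


def main(argv):
    mode, in_path, out_path = parse_args(argv)
    if in_path:
        with open(in_path, "rb") as f:
            data = f.read()
    else:
        data = sys.stdin.buffer.read()
    if mode == "encode":
        result = encode_image(data).encode("utf-8")  # text is written as UTF-8
    else:
        result = decode_text(data.decode("utf-8"))
    if out_path:
        with open(out_path, "wb") as f:
            f.write(result)
    else:
        sys.stdout.buffer.write(result)
```

A script would end with `main(sys.argv[1:])`. When file names are missing, it falls back to stdin and stdout, as the rule permits.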

- For your solution, please post:

- Your code, in full, and/or a link to it hosted elsewhere (if it's very long, or requires many files to compile, or something).

- An explanation of how it works, if it's not immediately obvious from the code or if the code is long and people will be interested in a summary.

- An example image, with the original image, the text it compresses down to, and the decoded image.

- If you are building on an idea that someone else had, please attribute them. It's OK to try to do a refinement of someone else's idea, but you must attribute them.

Guidelines

These are basically rules that may be broken, suggestions, or scoring criteria:

- Aesthetics are important. I'll be judging, and suggest that other people judge, based on:

- How good the output image looks, and how much it looks like the original.

- How nice the text looks. Completely random gobbledygook is OK if you have a really clever compression scheme, but I also want to see answers that turn images into multilingual poems, or something clever like that. Note that the author of the original solution decided to use only Chinese characters, since it looked nicer that way.

- Interesting code and clever algorithms are always good. I like short, to the point, and clear code, but really clever complicated algorithms are OK too as long as they produce good results.

- Speed is also important, though not as important as how good a job compressing the image you do. I'd rather have a program that can convert an image in a tenth of a second than something that will be running genetic algorithms for days on end.

- I will prefer shorter solutions to longer ones, as long as they are reasonably comparable in quality; conciseness is a virtue.

- Your program should be implemented in a language that has a freely-available implementation on Mac OS X, Linux, or Windows. I'd like to be able to run the programs, but if you have a great solution that only runs under MATLAB or something, that's fine.

- Your program should be as general as possible; it should work for as many different images as possible, though some may produce better results than others. In particular:

- Having a few images built into the program that it matches and writes a reference to, and then produces the matching image upon decoding, is fairly lame and will only cover a few images.

- A program that can take images of simple, flat, geometric shapes and decompose them into some vector primitive is pretty nifty, but if it fails on images beyond a certain complexity it is probably insufficiently general.

- A program that can only take images of a particular fixed aspect ratio but does a good job with them would also be OK, but not ideal.

- You may find that a black and white image can get more information into a smaller space than a color image. On the other hand, that may limit the types of image it's applicable to; faces come out fine in black and white, but abstract designs may not fare so well.

- It is perfectly fine if the output image is smaller than the input, while being roughly the same proportion. It's OK if you have to scale the image up to compare it to the original; what's important is how it looks.

- Your program should produce output that could actually go through Twitter and come out unscathed. This is only a guideline rather than a rule, since I couldn't find any documentation on the precise set of characters supported, but you should probably avoid control characters, funky invisible combining characters, private use characters, and the like.

Scoring rubric

As a general guide to how I will be ranking solutions when choosing my accepted solution, let's say that I'll probably be evaluating solutions on a 25-point scale (this is very rough, and I won't be scoring anything directly, just using this as a basic guideline):

- 15 points for how well the encoding scheme reproduces a wide range of input images. This is a subjective, aesthetic judgement.

- 0 means that it doesn't work at all, it gives the same image back every time, or something

- 5 means that it can encode a few images, though the decoded version looks ugly and it may not work at all on more complicated images

- 10 means that it works on a wide range of images, and produces pleasant looking images which may occasionally be distinguishable

- 15 means that it produces perfect replicas of some images, and even for larger and more complex images, gives something that is recognizable. Or, perhaps it does not make images that are quite recognizable, but produces beautiful images that are clearly derived from the original.

- 3 points for clever use of the Unicode character set

- 0 points for simply using the entire set of allowed characters

- 1 point for using a limited set of characters that are safe for transfer over Twitter or in a wider variety of situations

- 2 points for using a thematic subset of characters, such as only Han ideographs or only right-to-left characters

- 3 points for doing something really neat, like generating readable text or using characters that look like the image in question

- 3 points for clever algorithmic approaches and code style

- 0 points for something that is 1000 lines of code only to scale the image down, treat it as 1 bit per pixel, and base64 encode that

- 1 point for something that uses a standard encoding technique and is well written and brief

- 2 points for something that introduces a relatively novel encoding technique, or that is surprisingly short and clean

- 3 points for a one liner that actually produces good results, or something that breaks new ground in graphics encoding (if this seems like a low number of points for breaking new ground, remember that a result this good will likely have a high score for aesthetics as well)

- 2 points for speed. All else being equal, faster is better, but the above criteria are all more important than speed

- 1 point for running on free (open source) software, because I prefer free software (note that C# will still be eligible for this point as long as it runs on Mono, likewise MATLAB code would be eligible if it runs on GNU Octave)

- 1 point for actually following all of the rules. These rules have gotten a bit big and complicated, so I'll probably accept otherwise good answers that get one small detail wrong, but I will give an extra point to any solution that does actually follow all of the rules

Reference images

Some folks have asked for some reference images. Here are a few reference images that you can try; smaller versions are embedded here, they all link to larger versions of the image if you need those:

Prize

I am offering a 500 rep bounty (plus the 50 that StackOverflow kicks in) for the solution that I like the best, based on the above criteria. Of course, I encourage everyone else to vote on their favorite solutions here as well.

Note on deadline

This contest will run until the bounty runs out, about 6 PM on Saturday, May 30. I can't say the precise time it will end; it may be anywhere from 5 to 7 PM. I will guarantee that I'll look at all entries submitted by 2 PM, and I will do my best to look at all entries submitted by 4 PM; if solutions are submitted after that, I may not have a chance to give them a fair look before I have to make my decision. Also, the earlier you submit, the more chance you will have for voting to be able to help me pick the best solution, so try and submit earlier rather than right at the deadline.

Unicode notes

There has also been some confusion about exactly which Unicode characters are allowed. The range of possible Unicode code points is U+0000 to U+10FFFF. Some code points are never valid to use as Unicode characters in any open interchange of data; these are the noncharacters and the surrogate code points. Noncharacters are defined in the Unicode Standard 5.1.0 section 16.7 as the values U+FFFE, U+FFFF, U+nFFFE, U+nFFFF where n is 1–10 hexadecimal, and the range U+FDD0–U+FDEF. These values are intended to be used for application-specific internal usage, and conforming applications may strip these characters out of text processed by them. Surrogate code points, defined in the Unicode Standard 5.1.0 section 3.8 as U+D800–U+DFFF, are used for encoding characters beyond the Basic Multilingual Plane in UTF-16; thus, it is impossible to represent these code points directly in the UTF-16 encoding, and it is invalid to encode them in any other encoding. Thus, for the purpose of this contest, I will allow any program which encodes images into a sequence of no more than 140 Unicode code points from the range U+0000–U+10FFFF, excluding all noncharacters and surrogate code points as defined above.

I will prefer solutions that use only assigned characters, and even better ones that use clever subsets of assigned characters or do something interesting with the character set they use. For a list of assigned characters, see the Unicode Character Database; note that some characters are listed directly, while some are listed only as the start and end of a range. Also note that surrogate code points are listed in the database, but forbidden as mentioned above. If you would like to take advantage of certain properties of characters for making the text you output more interesting, there are a variety of databases of character information available, such as a list of named code blocks and various character properties.

Since Twitter does not specify the exact character set they support, I will be lenient about solutions which do not actually work with Twitter because certain characters count extra or certain characters are stripped. It is preferred but not required that all encoded outputs should be able to be transferred unharmed via Twitter or another microblogging service such as identi.ca. I have seen some documentation stating that Twitter entity-encodes <, >, and &, and thus counts those as 4, 4, and 5 characters respectively, but I have not tested that out myself, and their JavaScript character counter doesn't seem to count them that way.

Tips & Links

- The definition of valid Unicode characters in the rules is a bit complicated. Choosing a single block of characters, such as CJK Unified Ideographs (U+4E00–U+9FCF) may be easier.
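Following that tip, here is a sketch that writes a big integer as exactly 140 characters from the block U+4E00–U+9FCF. That is 20,944 code points, taking the tip's range at face value; depending on the Unicode version, the last few code points of the range may still be unassigned. `int_to_cjk` and `cjk_to_int` are hypothetical names:

```python
BASE_CP = 0x4E00                 # first code point of the block
RADIX = 0x9FCF - BASE_CP + 1     # 20944 code points in U+4E00-U+9FCF


def int_to_cjk(n: int, length: int = 140) -> str:
    """Write n as exactly `length` base-RADIX digits, most significant first."""
    if not 0 <= n < RADIX ** length:
        raise ValueError("value does not fit in the message")
    chars = []
    for _ in range(length):
        n, digit = divmod(n, RADIX)
        chars.append(chr(BASE_CP + digit))
    return "".join(reversed(chars))


def cjk_to_int(text: str) -> int:
    """Inverse of int_to_cjk."""
    n = 0
    for ch in text:
        digit = ord(ch) - BASE_CP
        if not 0 <= digit < RADIX:
            raise ValueError(f"character {ch!r} is outside the block")
        n = n * RADIX + digit
    return n
```

A single contiguous block keeps the validity check down to one range comparison. Fixing the output length at 140 makes leading zero digits explicit, so the decoder never has to guess the length.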

- You may use existing image libraries, like ImageMagick or Python Imaging Library, for your image manipulation.

- If you need some help understanding the Unicode character set and its various encodings, see this quick guide or this detailed FAQ on UTF-8 in Linux and Unix.

- The earlier you get your solution in, the more time I (and other people voting) will have to look at it. You can edit your solution if you improve it; I'll base my bounty on the most recent version when I take my last look through the solutions.

- If you want an easy image format to parse and write (and don't want to just use an existing format), I'd suggest using the PPM format. It's a text-based format that's very easy to work with, and you can use ImageMagick to convert to and from it.
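Here is a sketch of the plain-text P3 variant of PPM, with hypothetical helper names `write_ppm` and `read_ppm`. It handles only P3, not the binary P6 variant, and rescales samples when the file's maximum value is not 255:

```python
def write_ppm(width, height, pixels):
    """Serialise rows of (r, g, b) tuples (0-255) as a plain-text P3 PPM."""
    lines = ["P3", f"{width} {height}", "255"]
    for row in pixels:
        lines.append(" ".join(f"{r} {g} {b}" for r, g, b in row))
    return "\n".join(lines) + "\n"


def read_ppm(text):
    """Parse a P3 PPM (with optional # comments) into (width, height, pixels)."""
    tokens = []
    for line in text.splitlines():
        tokens.extend(line.split("#", 1)[0].split())  # drop comments
    if tokens[0] != "P3":
        raise ValueError("only the plain-text P3 variant is handled here")
    width, height, maxval = (int(t) for t in tokens[1:4])
    # Rescale every sample to 0-255 in case maxval is something else.
    values = [int(t) * 255 // maxval for t in tokens[4:4 + 3 * width * height]]
    pixels = [[tuple(values[3 * (y * width + x):3 * (y * width + x) + 3])
               for x in range(width)]
              for y in range(height)]
    return width, height, pixels
```

Because the format is just whitespace-separated numbers, both directions fit in a few lines, and the intermediate file can be inspected by eye.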


Image files and Python source (versions 1 and 2)

Version 1

Here is my first attempt. I will update as I go.

I've got the SO logo down to 300 characters, almost losslessly. My technique converts the image to SVG vector art, so it works best on line art. It is really an SVG compressor; the original art still has to go through a vectorisation stage.

For my first attempt I used an online service for the PNG trace; however, there are MANY free and non-free tools that can handle this part, including potrace (open source).

Here are the results

Original SO logo: http://www.warriorhut.org/graphics/svg_to_unicode/so-logo.png

Decoded SO logo, after encoding and decoding: http://www.warriorhut.org/graphics/svg_to_unicode/so-logo-decoded.png

Characters: 300

Time: Not measured but practically instant (not including vectorisation/rasterisation steps)

The next stage will be to embed 4 symbols (SVG path points and commands) per Unicode character. At the moment my Python build does not have wide-character (UCS-4) support, which limits my resolution per character. I've also limited the maximum value to 0xD800, the lower end of the reserved (surrogate) range; however, once I build a list of allowed characters and a filter to avoid the disallowed ones, I can theoretically push the required number of characters as low as 70–100 for the logo above.

A limitation of this method at present is that the output size is not fixed. It depends on the number of vector nodes/points after vectorisation. Automating this limit will require either pixelating the image (which removes the main benefit of vectors) or repeatedly running the paths through a simplification stage until the desired node count is reached (which I'm currently doing manually in Inkscape).

Version 2

UPDATE: v2 is now qualified to compete. Changes:

- Throwing away 4 bits of color data per color, then packing it into a character via hex conversion.
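Here is a sketch of the v2 change. The answer doesn't describe the exact character mapping, so this assumes that the three remaining hex digits are read as a 12-bit number and offset into the CJK Unified Ideographs block. Both `OFFSET` and the function names are hypothetical:

```python
OFFSET = 0x4E00  # hypothetical: start of the CJK Unified Ideographs block


def pack_color(hex_color):
    """'#rrggbb' -> one character, keeping the top 4 bits of each channel."""
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (1, 3, 5))
    short_hex = f"{r >> 4:x}{g >> 4:x}{b >> 4:x}"  # e.g. '#ff8800' -> 'f80'
    return chr(OFFSET + int(short_hex, 16))       # 12 bits -> one code point


def unpack_color(ch):
    """Inverse of pack_color; each nibble is doubled, so 'f80' -> '#ff8800'."""
    short_hex = f"{ord(ch) - OFFSET:03x}"
    return "#" + "".join(d * 2 for d in short_hex)
```

Doubling each nibble on the way back maps 0xF to 0xFF and 0x0 to 0x00, so pure black, white and primaries survive exactly. Other colors land on the nearest value of the form 0xNN.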

Characters: 133

Time: A few seconds

v2 decoded, after encoding and decoding (version 2): http://www.warriorhut.org/graphics/svg_to_unicode/so-logo-decoded-v2.png

As you can see, there are some artifacts this time. They aren't a limitation of the method but a mistake somewhere in my conversions. The artifacts happen when the points go outside the range 0.0–127.0, and my attempts to constrain them have had mixed success. The solution is simply to scale the image down; however, I had trouble scaling the actual points rather than the artboard or group matrix, and I'm too tired now to care. In short, if your points are in the supported range, it generally works.
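One way to keep points inside 0.0–127.0 is to rescale the coordinates themselves rather than the artboard or group matrix. This sketch uses a hypothetical `fit_points` and assumes Bézier control handles are included in the point list, since they can lie outside the drawn curve. It applies one scale factor to both axes so the aspect ratio is preserved:

```python
def fit_points(points, limit=127.0):
    """Uniformly scale and translate (x, y) points into [0, limit] on both axes."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    # The larger extent decides the scale, so the shape is not distorted.
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0  # avoid dividing by zero
    scale = limit / span
    return [((x - min_x) * scale, (y - min_y) * scale) for x, y in points]
```

The decoder needs no extra information to undo this. The output only has to look like the original, and a uniformly scaled copy does.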

I believe the kink in the middle is due to a handle moving to the other side of a handle it's linked to. Basically, the points are too close together in the first place. Running a simplify filter over the source image before compressing it should fix this and shave off some unnecessary characters.

UPDATE:

This method is fine for simple objects so I needed a way to simplify complex paths and reduce noise. I used Inkscape for this task. I've had some luck with grooming out unnecessary paths using Inkscape but not had time to try automating it. I've made some sample svgs using the Inkscape 'Simplify' function to reduce the number of paths.

Simplify works ok but it can be slow with this many paths.
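For polylines, this kind of simplification can be automated with the Ramer–Douglas–Peucker algorithm. It is a swapped-in standard technique, not necessarily what Inkscape's Simplify does, and it works on straight-line vertices rather than Bézier handles:

```python
import math


def _distance_to_chord(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:                      # a and b coincide
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def simplify(points, tolerance):
    """Ramer-Douglas-Peucker: keep only the vertices that deviate from the
    straight chord by more than `tolerance`."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    index, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _distance_to_chord(points[i], first, last)
        if d > dmax:
            index, dmax = i, d
    if dmax <= tolerance:
        return [first, last]
    left = simplify(points[:index + 1], tolerance)
    right = simplify(points[index:], tolerance)
    return left[:-1] + right  # the split vertex appears in both halves
```

Raising `tolerance` until the node count fits the character budget would be one way to automate the manual loop described earlier.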

Autotrace example: http://www.warriorhut.org/graphics/svg_to_unicode/autotrace_16_color_manual_reduction.png

Cornell box: http://www.warriorhut.com/graphics/svg_to_unicode/cornell_box_simplified.png

Lena: http://www.warriorhut.com/graphics/svg_to_unicode/lena_std_washed_autotrace.png

Thumbnails traced: http://www.warriorhut.org/graphics/svg_to_unicode/competition_thumbnails_autotrace.png

Here are some ultra-low-res shots. These would be closer to the 140-character limit, though some clever path compression may be needed as well.

Groomed: http://www.warriorhut.org/graphics/svg_to_unicode/competition_thumbnails_groomed.png

Simplified and despeckled.

Triangulated: http://www.warriorhut.org/graphics/svg_to_unicode/competition_thumbnails_triangulated.png

Simplified, despeckled and triangulated.

ABOVE: Simplified paths using autotrace.

Unfortunately, my parser doesn't handle the autotrace output, so I don't know how many points are in use or how far to simplify, and sadly there's little time to write that before the deadline. It's much easier to parse than the Inkscape output, though.

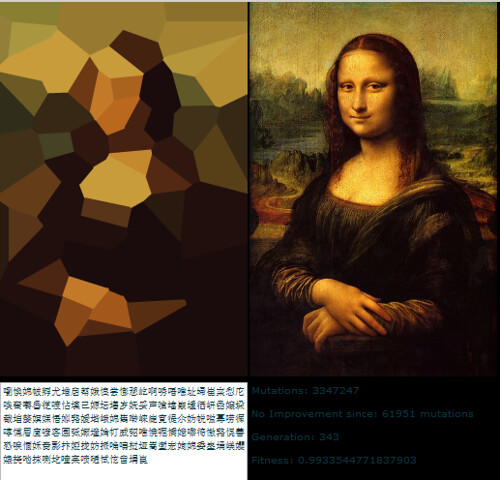


Alright, here's mine: nanocrunch.cpp and the CMakeLists.txt file to build it using CMake. It relies on the Magick++ ImageMagick API for most of its image handling. It also requires the GMP library for bignum arithmetic for its string encoding.

I based my solution off of fractal image compression, with a few unique twists. The basic idea is to take the image, scale down a copy to 50% and look for pieces in various orientations that look similar to non-overlapping blocks in the original image. It takes a very brute force approach to this search, but that just makes it easier to introduce my modifications.
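This sketch shows only the brute-force matching step, on a grayscale image stored as a list of rows. It halves a copy, then tries every position in the half-size copy and each of the 8 rotations and flips, keeping the lowest squared error. The 45-degree orientations and the color blending described in this answer are left out, and the function names are hypothetical:

```python
def downscale(img):
    """Halve a grayscale image (list of rows) by averaging 2x2 pixel groups."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4
             for x in range(w)]
            for y in range(h)]


def block(img, x, y, size):
    """Square sub-block with top-left corner at (x, y)."""
    return [row[x:x + size] for row in img[y:y + size]]


def orientations(b):
    """Yield the 8 rotations and mirror images of a square block."""
    for _ in range(4):
        b = [list(r) for r in zip(*b[::-1])]  # rotate 90 degrees clockwise
        yield b
        yield [r[::-1] for r in b]            # and its mirror image


def sse(a, b):
    """Sum of squared differences between two equally sized blocks."""
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def best_match(img, small, rx, ry, size):
    """Search `small` (the half-size copy) for the block and orientation
    closest to the range block of `img` at (rx, ry).

    Returns (error, x, y, orientation index).
    """
    target = block(img, rx, ry, size)
    best = None
    for y in range(len(small) - size + 1):
        for x in range(len(small[0]) - size + 1):
            for k, cand in enumerate(orientations(block(small, x, y, size))):
                err = sse(target, cand)
                if best is None or err < best[0]:
                    best = (err, x, y, k)
    return best
```

The nested loops make the cost obvious: every range block is compared against every position and orientation. That is consistent with the encode times of tens of seconds reported below.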

The first modification is that instead of just looking at ninety degree rotations and flips, my program also considers 45 degree orientations. It's one more bit per block, but it helps the image quality immensely.

The other thing is that storing a contrast/brightness adjustment for each color component of each block is way too expensive. Instead, I store a heavily quantized color (the palette has only 4 * 4 * 4 = 64 colors) that simply gets blended in, in some proportion. Mathematically, this is equivalent to a variable brightness and constant contrast adjustment for each color. Unfortunately, it also means there's no negative contrast to flip the colors.
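Here is a sketch of the 64-color palette and the blend. It assumes a single blend factor `alpha` shared by all blocks, which is a hypothetical reading of "in some proportion". With `out = (1 - alpha) * domain + alpha * color`, the contrast factor `1 - alpha` is the same everywhere while the offset `alpha * color` varies by channel. Because `1 - alpha` stays positive, there is no negative contrast:

```python
LEVELS = 4  # levels per channel: 4 * 4 * 4 = 64 palette colours


def quantize(rgb):
    """Nearest palette entry, as a triple of per-channel indices 0..3."""
    return tuple(round(c * (LEVELS - 1) / 255) for c in rgb)


def palette_color(idx):
    """Palette entry back to 0-255 channel values (0, 85, 170 or 255)."""
    return tuple(i * 255 // (LEVELS - 1) for i in idx)


def blend(domain_pixel, idx, alpha):
    """Blend a palette colour into a domain-block pixel.

    out = (1 - alpha) * domain + alpha * colour, per channel: the contrast
    factor (1 - alpha) is the same for every channel and every block, while
    the offset alpha * colour differs per channel.
    """
    color = palette_color(idx)
    return tuple((1 - alpha) * d + alpha * c for d, c in zip(domain_pixel, color))
```

Each block then costs only 6 bits for its color (3 channels × 2 bits), instead of separate contrast and brightness values for every channel.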

Once it's computed the position, orientation and color for each block, it encodes this into a UTF-8 string. First, it generates a very large bignum to represent the data in the block table and the image size. The approach to this is similar to Sam Hocevar's solution -- kind of a large number with a radix that varies by position.

Then it converts that into a base of whatever the size of the available character set is. By default, it makes full use of the assigned Unicode character set, minus the less-than, greater-than, ampersand, control, combining, surrogate and private-use characters. It's not pretty, but it works. You can also comment out the default table and select printable 7-bit ASCII (again excluding the <, >, and & characters) or CJK Unified Ideographs instead. The table of which character codes are available is stored run-length encoded, with alternating runs of invalid and valid characters.
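Here is a sketch of such a run table and the index-to-code-point mapping it supports. The tiny table is hypothetical: it admits printable ASCII from U+0021 to U+007E, minus <, > and &, rather than the full assigned set:

```python
# Hypothetical run table: alternating lengths of invalid and valid runs,
# starting at U+0000 with an invalid run. This small example admits
# U+0021-U+007E except '&' (U+0026), '<' (U+003C) and '>' (U+003E).
RUNS = [0x21, 5, 1, 21, 1, 1, 1, 64]


def alphabet_size(runs=RUNS):
    """Number of valid characters: the sum of the odd-position runs."""
    return sum(runs[1::2])


def index_to_code_point(i, runs=RUNS):
    """Code point of the i-th valid character (0-based)."""
    cp = 0
    for k, length in enumerate(runs):
        if k % 2 == 1:          # odd entries are runs of valid characters
            if i < length:
                return cp + i
            i -= length
        cp += length
    raise IndexError("index is past the end of the table")


def code_point_to_index(c, runs=RUNS):
    """Inverse of index_to_code_point; rejects characters outside the set."""
    cp = index = 0
    for k, length in enumerate(runs):
        if cp <= c < cp + length:
            if k % 2 == 0:
                raise ValueError(f"U+{c:04X} is not in the character set")
            return index + (c - cp)
        if k % 2 == 1:
            index += length
        cp += length
    raise ValueError(f"U+{c:04X} is past the end of the table")
```

`alphabet_size()` gives the radix for the base conversion. Each digit of the result is then turned into an output character with `index_to_code_point`; a table for the full assigned set just has many more runs.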

Anyway, here are some images and times (as measured on my old 3.0GHz P4), compressed to 140 characters in the full assigned Unicode set described above. Overall, I'm fairly pleased with how they all turned out. If I had more time to work on this, I'd probably try to reduce the blockiness of the decompressed images. Still, I think the results are pretty good for the extreme compression ratio. The decompressed images are a bit impressionistic, but I find it relatively easy to see how bits correspond to the original.

Stack Overflow Logo (8.6s to encode, 7.9s to decode, 485 bytes):

http://i44.tinypic.com/2w7lok1.png


Lena (32.8s to encode, 13.0s to decode, 477 bytes):

http://i42.tinypic.com/2rr49wg.png http://i40.tinypic.com/2rhxxyu.png

Mona Lisa (43.2s to encode, 14.5s to decode, 490 bytes):

http://i41.tinypic.com/ekgwp3.png http://i43.tinypic.com/ngsxep.png

Edit: CJK Unified Characters

Sam asked in the comments about using this with CJK. Here's a version of the Mona Lisa compressed to 139 characters from the CJK Unified character set:

http://i43.tinypic.com/2yxgdfk.png

咏璘驞凄脒鵚据蛥鸂拗朐朖辿韩瀦魷歪痫栘璯緍脲蕜抱揎頻蓼債鑡嗞靊寞柮嚛嚵籥聚隤慛絖銓馿渫櫰矍昀鰛掾撄粂敽牙稉擎蔍螎葙峬覧絀蹔抆惫冧笻哜搀澐芯譶辍澮垝黟偞媄童竽梀韠镰猳閺狌而羶喙伆杇婣唆鐤諽鷍鴞駫搶毤埙誖萜愿旖鞰萗勹鈱哳垬濅鬒秀瞛洆认気狋異闥籴珵仾氙熜謋繴茴晋髭杍嚖熥勳縿餅珝爸擸萿

The tuning parameters at the top of the program that I used for this were: 19, 19, 4, 4, 3, 10, 11, 1000, 1000. I also commented out the first definitions of number_assigned and codes, and uncommented the last definitions of them to select the CJK Unified character set.

My full solution can be found at http://caca.zoy.org/wiki/img2twit. It has the following features:

Here is a rough overview of the encoding process:

And this is the decoding process:

What I believe is the most original part of the program is the bitstream. Instead of packing bit-aligned values (`stream <<= shift; stream |= value`), I pack arbitrary values that are not in power-of-two ranges (`stream *= range; stream += value`). This requires bignum computations and is of course a lot slower, but it gives me 2009.18 bits instead of 1960 when using the 20902 main CJK characters (that's three more points I can put in the data). And when using ASCII, it gives me 917.64 bits instead of 840.

I decided against a method for the initial image computation that would have required heavy weaponry (corner detection, feature extraction, colour quantisation...) because I wasn't sure at first that it would really help. Now I realise convergence is slow (1 minute is acceptable, but it's slow nonetheless), and I may try to improve on that.
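These capacity figures follow from simple arithmetic. When 140 symbols from an alphabet of size N are packed as one bignum, they carry 140 × log2(N) bits. When each character holds a whole number of bits, they carry only 140 × floor(log2(N)) bits. The 94-symbol ASCII alphabet below is inferred from the 917.64 figure; the text does not state it.

```python
import math

def capacity_bits(alphabet_size, length=140):
    """Bits carried by `length` characters: bignum packing vs whole bits per character."""
    packed = length * math.log2(alphabet_size)                 # stream *= range; stream += value
    aligned = length * math.floor(math.log2(alphabet_size))    # stream <<= shift; stream |= value
    return packed, aligned

# The ASCII size of 94 is an inference from the 917.64 figure, not stated by the author.
for label, size in [("CJK, 20902 chars", 20902), ("ASCII, 94 chars", 94)]:
    packed, aligned = capacity_bits(size)
    print(f"{label}: {packed:.2f} bits packed vs {aligned} bits aligned")
```

This reproduces the quoted numbers to within rounding. Bit-aligned packing of 20902 characters can only use 14 bits each, which wastes about 0.35 bits per character.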

The main fitting loop is loosely inspired by the Direct Binary Search (DBS) dithering algorithm, where pixels are randomly swapped or flipped until a better halftone is obtained. The energy computation is a simple root-mean-square distance, but I first apply a 5x5 median filter to the original image. A Gaussian blur would probably better represent the behaviour of the human eye, but I didn't want to lose sharp edges. I also decided against simulated annealing or other difficult-to-tune methods because I don't have months to calibrate the process. Thus the "quality" flag simply represents the number of iterations performed on each point before the encoder stops.
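This is a minimal greyscale sketch of a DBS-style loop. It applies a 5x5 median filter to the target, measures RMS distance, makes random single-point perturbations, and keeps a change only if the energy drops. The renderer here is a HYPOTHETICAL stand-in (each pixel takes the value of its nearest point) because img2twit's actual point interpolation isn't described here. The perturbation sizes are also made up.

```python
import math
import random

def median_filter(img, radius=2):
    """Median filter with a (2*radius+1)^2 window (5x5 by default); borders are clamped."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = sorted(
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            )
            row.append(window[len(window) // 2])
        out.append(row)
    return out

def render(points, w, h):
    """HYPOTHETICAL renderer: every pixel takes the grey value of its nearest point."""
    return [[min(points, key=lambda p: (p[0] - x) ** 2 + (p[1] - y) ** 2)[2]
             for x in range(w)] for y in range(h)]

def rms(a, b):
    """Root-mean-square distance between two equally sized greyscale images."""
    n = len(a) * len(a[0])
    return math.sqrt(sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / n)

def fit(img, n_points=8, quality=30, seed=0):
    """Greedy DBS-style loop: perturb one random point, keep the change only if RMS drops."""
    rng = random.Random(seed)
    h, w = len(img), len(img[0])
    target = median_filter(img)
    points = [(rng.randrange(w), rng.randrange(h), rng.randrange(256)) for _ in range(n_points)]
    energy = rms(render(points, w, h), target)
    for _ in range(quality * n_points):  # "quality" = iterations per point
        i = rng.randrange(n_points)
        x, y, v = points[i]
        if rng.random() < 0.5:  # move the point by one pixel on each axis
            x = min(max(x + rng.choice((-1, 1)), 0), w - 1)
            y = min(max(y + rng.choice((-1, 1)), 0), h - 1)
        else:  # nudge its grey value
            v = min(max(v + rng.randint(-32, 32), 0), 255)
        trial = points[:i] + [(x, y, v)] + points[i + 1:]
        e = rms(render(trial, w, h), target)
        if e < energy:
            points, energy = trial, e
    return points, energy
```

The loop is pure Python and re-renders the whole image on every trial, so it is slow. It is only meant to show the accept-if-better structure and how "quality" scales the iteration count.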

Even though not all images compress well, I'm surprised by the results and I really wonder what other methods exist that can compress an image to 250 bytes.

I also have small movies of the encoder state's evolution from a random initial state and from a "good" initial state.

Edit: here is how the compression method compares with JPEG. On the left is jamoes's 536-byte picture from above. On the right is the Mona Lisa compressed down to 534 bytes using the method described here (the byte counts refer to data bytes, therefore ignoring bits wasted by using Unicode characters):

Edit: just replaced CJK text with the newest versions of the images.

The following isn't a formal submission, since my software hasn't been tailored in any way for the indicated task. DLI can be described as an optimizing general-purpose lossy image codec. It's the PSNR and MS-SSIM record holder for image compression, and I thought it would be interesting to see how it performs on this particular task. I took the reference Mona Lisa image provided, scaled it down to 100x150, then used DLI to compress it to 344 bytes.

Mona Lisa DLI http://i40.tinypic.com/2md5q4m.png

For comparison with the JPEG and IMG2TWIT compressed samples, I used DLI to compress the image to 534 bytes as well. The JPEG is 536 bytes and IMG2TWIT is 534 bytes. Images have been scaled up to approximately the same size for easy comparison. JPEG is the left image, IMG2TWIT is center, and DLI is the right image.

Comparison http://i42.tinypic.com/302yjdg.png

The DLI image manages to preserve some of the facial features, most notably the famous smile :).

The general overview of my solution would be:

I know that you were asking for code, but I don't really want to spend the time to actually code this up. I figured that an efficient design might at least inspire someone else to code this up.

I think the major benefit of my proposed solution is that it is reusing as much existing technology as possible. It may be fun to try to write a good compression algorithm, but there is guaranteed to be a better algorithm out there, most likely written by people who have a degree in higher math.

One other important note, though: if it is decided that UTF-16 is the preferred encoding, then this solution falls apart. In UTF-16, 140 BMP characters amount to only 280 bytes, and JPEGs don't really work when compressed down that far. Then again, maybe there is a better compression algorithm than JPEG for this specific problem statement.

Okay, I'm late to the game, but nevertheless I made my project.

It's a toy genetic algorithm that uses translucent colorful circles to recreate the initial image.
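This sketch shows the core of such an approach: compositing translucent circles in order and scoring the result against the target. It is NOT taken from tkadlubo's circles.lua. It assumes greyscale values and alphas in [0, 1], hard-edged circles, and standard "over" blending. The genome layout `(cx, cy, r, grey, alpha)` is hypothetical.

```python
def draw_circles(circles, w, h, background=0.0):
    """Composite translucent grey circles in order using 'over' alpha blending.
    Each circle is (cx, cy, r, grey, alpha) with grey and alpha in [0, 1]."""
    canvas = [[background] * w for _ in range(h)]
    for cx, cy, r, grey, alpha in circles:
        for y in range(max(0, int(cy - r)), min(h, int(cy + r) + 1)):
            for x in range(max(0, int(cx - r)), min(w, int(cx + r) + 1)):
                if (x - cx) ** 2 + (y - cy) ** 2 <= r * r:
                    canvas[y][x] = alpha * grey + (1 - alpha) * canvas[y][x]
    return canvas

def fitness(circles, target):
    """Lower is better: sum of squared per-pixel differences from the target image."""
    h, w = len(target), len(target[0])
    img = draw_circles(circles, w, h)
    return sum((a - b) ** 2 for ra, rb in zip(img, target) for a, b in zip(ra, rb))
```

A genetic algorithm would mutate and recombine lists of such tuples and keep the candidates with the lowest `fitness`. Because circles are drawn in order, the same circles in a different order can score differently.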

Features:

Misfeatures:

Here's an example twit that represents Lena:

犭楊谷杌蒝螦界匘玏扝匮俄归晃客猘摈硰划刀萕码摃斢嘁蜁嚎耂澹簜僨砠偑婊內團揕忈義倨襠凁梡岂掂戇耔攋斘眐奡萛狂昸箆亲嬎廙栃兡塅受橯恰应戞优猫僘瑩吱賾卣朸杈腠綍蝘猕屐稱悡詬來噩压罍尕熚帤厥虤嫐虲兙罨縨炘排叁抠堃從弅慌螎熰標宑簫柢橙拃丨蜊缩昔儻舭勵癳冂囤璟彔榕兠摈侑蒖孂埮槃姠璐哠眛嫡琠枀訜苄暬厇廩焛瀻严啘刱垫仔

The code is in a Mercurial repository at bitbucket.org. Check out http://bitbucket.org/tkadlubo/circles.lua

The following is my approach to the problem. I must admit that this was quite an interesting project to work on; it is definitely outside my normal realm of work and has given me something new to learn about.

The basic idea behind mine is as follows:

It turns out that this does work, but only to a limited extent as you can see from the sample images below. In terms of output, what follows is a sample tweet, specifically for the Lena image shown in the samples.

As you can see, I did try to constrain the character set a bit; however, I ran into issues doing this when storing the image color data. This encoding scheme also tends to waste a lot of bits that could be used for additional image information.

In terms of run times, for small images the code is extremely fast, about 55ms for the sample images provided, but the time does increase with larger images. For the 512x512 Lena reference image the running time was 1182ms. I should note that the odds are pretty good that the code itself isn't very optimized for performance (e.g. everything is worked with as a Bitmap) so the times could go down a bit after some refactoring.

Please feel free to offer me any suggestions on what I could have done better or what might be wrong with the code. The full listing of run times and sample output can be found at the following location: http://code-zen.info/twitterimage/

Update One

I've updated the RLE code used when compressing the tweet string to do a basic look-back and, if a match is found, use that for the output. This only works for the number-value pairs, but it does save a couple of characters of data. The running time and image quality are more or less the same, but the tweets tend to be a bit smaller. I will update the chart on the website as I complete the testing. What follows is one of the example tweet strings, again for the small version of Lena:
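For readers unfamiliar with run-length encoding, here is a plain RLE encoder/decoder over a string. It is a generic sketch and does NOT implement the author's look-back step, whose details aren't given here.

```python
from itertools import groupby

def rle_encode(s):
    """Collapse runs of repeated characters into (count, char) pairs."""
    return [(len(list(group)), ch) for ch, group in groupby(s)]

def rle_decode(pairs):
    """Expand (count, char) pairs back into the original string."""
    return "".join(ch * count for count, ch in pairs)
```

For example, `rle_encode("aaabcc")` gives `[(3, "a"), (1, "b"), (2, "c")]`. RLE only pays off when runs are long, which is why it suits blocks of repeated colour counts better than varied data.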

Update Two

Another small update: I modified the code to pack the color shades into groups of three rather than four. This uses some more space, but unless I'm missing something, it should mean that "odd" characters no longer appear where the color data is. I also updated the compression a bit more so that it now acts on the entire string rather than just the color count block. I'm still testing the run times, but they appear to be nominally improved; the image quality, however, is still the same. What follows is the newest version of the Lena tweet:

- StackOverflow Logo: http://code-zen.info/twitterimage/images/stackoverflow-logo.bmp
- Cornell Box: http://code-zen.info/twitterimage/images/cornell-box.bmp
- Lena: http://code-zen.info/twitterimage/images/lena.bmp
- Mona Lisa: http://code-zen.info/twitterimage/images/mona-lisa.bmp

This genetic algorithm that Roger Alsing wrote has a good compression ratio, at the expense of long compression times. The resulting vector of vertices could be further compressed using a lossy or lossless algorithm.
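Here is one way the "lossy or lossless" step could look, as a sketch under stated assumptions. It uses a lossy stage that snaps each vertex to a hypothetical 256-level grid (one byte per coordinate), followed by the standard library's `zlib` (DEFLATE) as the lossless stage. It assumes vertices lie within the image bounds; values outside are clamped.

```python
import zlib

def compress_vertices(vertices, width, height):
    """Lossy step: snap each (x, y) to a 256-level grid; lossless step: zlib (DEFLATE)."""
    quantised = bytearray()
    for x, y in vertices:
        quantised.append(min(255, max(0, round(x / width * 255))))
        quantised.append(min(255, max(0, round(y / height * 255))))
    return zlib.compress(bytes(quantised), 9)

def decompress_vertices(blob, width, height):
    """Undo zlib, then map grid indices back to image coordinates."""
    data = zlib.decompress(blob)
    return [(data[i] / 255 * width, data[i + 1] / 255 * height)
            for i in range(0, len(data), 2)]
```

The quantisation error is at most half a grid step per coordinate. Note that `zlib.compress` adds a 2-byte header and a 4-byte Adler-32 checksum, so for very short vertex lists the output can be larger than the raw bytes. A raw DEFLATE stream (`zlib.compressobj` with negative `wbits`) avoids that overhead.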

http://rogeralsing.com/2008/12/07/genetic-programming-evolution-of-mona-lisa/

Would be an interesting program to implement, but I'll give it a miss.

In the original challenge the size limit is defined as what Twitter still allows you to send if you paste your text in their textbox and press "update". As some people correctly noticed this is different from what you could send as a SMS text message from your mobile.

What is not explicitly mentioned (but was my personal rule) is that you should be able to select the tweeted message in your browser, copy it to the clipboard and paste it into a text input field of your decoder so it can display it. Of course, you are also free to save the message as a text file and read it back in, or to write a tool which accesses the Twitter API and filters out any message that looks like an image code (special markers, anyone? wink wink). But the rule is that the message has to have gone through Twitter before you are allowed to decode it.

Good luck with the 350 bytes - I doubt that you will be able to make use of them.

Posting a monochrome or greyscale image should increase the size of the image that can be encoded in that space, since you don't need to store colour.

The challenge could possibly be augmented to upload three images which, when recombined, give you a full-colour image while each separate image still works as a monochrome version.

Add some compression to the above and it could start looking viable...
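This sketch makes the idea concrete. Storing one channel instead of three cuts the raw data per pixel to a third, and the three-image variant amounts to sending each colour channel as its own greyscale image. The ITU-R BT.601 luma weights are an assumed, commonly used choice; the comment doesn't specify a conversion.

```python
def to_grey(rgb):
    """Collapse an (r, g, b) pixel to one 0-255 value using ITU-R BT.601 luma weights."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def split_channels(pixels):
    """Split RGB pixels into three single-channel planes, each viewable as a greyscale image."""
    return ([p[0] for p in pixels], [p[1] for p in pixels], [p[2] for p in pixels])

def merge_channels(red, green, blue):
    """Recombine three single-channel planes into RGB pixels."""
    return list(zip(red, green, blue))
```

Each plane would still need to fit in its own 140-character tweet, so the colour version costs three times the space of a single greyscale image.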

Nice!!! Now you guys have piqued my interest. No work will be done for the rest of the day...

Regarding the encoding/decoding part of this challenge.

base16b.org is my attempt to specify a standard method for safely and efficiently encoding binary data in the higher Unicode planes.

Some features:

Sorry, this answer comes way too late for the original competition. I started the project independently of this post, which I discovered half-way into it.

The idea of storing a bunch of reference images is interesting. Would it be so wrong to store say 25Mb of sample images, and have the encoder try and compose an image using bits of those? With such a minuscule pipe, the machinery at either end is by necessity going to be much greater than the volume of data passing through, so what's the difference between 25Mb of code, and 1Mb of code and 24Mb of image data?

(note the original guidelines ruled out restricting the input to images already in the library - I'm not suggesting that).

Stupid idea, but

`sha1(my_image)` would result in a "perfect" representation of any image (ignoring collisions). The obvious problem is that the decoding process requires inordinate amounts of brute-forcing.

1-bit monochrome would be a bit easier. Each pixel becomes a 1 or 0, so you would have 10000 bits of data for a 100*100 pixel image. Since a hex-encoded SHA-1 hash is 40 characters, we can fit three into one message and only have to brute-force two sets of 3333 bits and one set of 3334 (although even that is probably still inordinate).

It's not exactly practical. Even with a fixed-size 1-bit 100*100 px image, there are 2^10000 possible images, or 2^3333 to 2^3334 candidates per chunk when split into three.
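The numbers can be checked directly. `sha1_hex` and `brute_force` are hypothetical helpers for illustration. The 16-bit demo only finishes because 2^16 is tiny, and every extra bit doubles the worst-case work.

```python
import hashlib

def sha1_hex(bits_as_int, n_bits):
    """Hex SHA-1 of an n_bits-wide chunk, serialised big-endian into whole bytes."""
    return hashlib.sha1(bits_as_int.to_bytes((n_bits + 7) // 8, "big")).hexdigest()

def brute_force(target_hex, n_bits):
    """Try every n_bits-wide chunk until one hashes to target_hex (2**n_bits tries worst case)."""
    for candidate in range(2 ** n_bits):
        if sha1_hex(candidate, n_bits) == target_hex:
            return candidate
    return None

digest = sha1_hex(0, 10000)        # a blank 100x100 1-bit image is 10000 bits
print(len(digest))                 # a hex SHA-1 digest is always 40 characters
print(3 * len(digest) <= 140)      # so three digests fit in one message
print(brute_force(sha1_hex(0xBEEF, 16), 16) == 0xBEEF)  # a 16-bit chunk is recoverable...
print(len(str(2 ** 3333)))         # ...but 2**3333 candidates is a 1004-digit number
```

A 3333-bit chunk would need on the order of 2^3333 hash evaluations, far beyond any conceivable amount of computation. This is why the idea is impractical even before collisions are considered.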

This compression does well here:

http://www.intuac.com/userport/john/apt/

http://img86.imageshack.us/img86/4169/imagey.jpg

I used the following batch file:

The resulting filesize is 559 bytes.

Idea: could you use a font as a palette? Try to break an image into a series of vectors, then describe them with a combination of vector sets (each character is essentially a set of vectors). This uses the font as a dictionary. For instance, I could use an "l" for a vertical line and a "-" for a horizontal line. Just an idea.